OpenAI has revolutionized the AI landscape with an ever-growing portfolio of products that extend far beyond the familiar ChatGPT. For businesses, developers, and individual users looking to unlock the potential of these AI solutions, understanding the latest pricing models and optimization strategies is key to maximizing return on investment (ROI). This in-depth guide delves into OpenAI’s current pricing across its primary products, compares them with leading competitors, and offers actionable insights for optimizing your AI expenditures.

At the outset, it’s important to distinguish between the mainstream ChatGPT product—used by over 400 million users daily—and the OpenAI API offerings, which are primarily tailored for developers. While ChatGPT is designed for a broad consumer base, OpenAI’s APIs cater to developers creating new products from the ground up at speeds previously unimaginable or enhancing existing products with cutting-edge AI capabilities. Understanding this distinction is crucial for determining the best fit for your AI-driven needs.

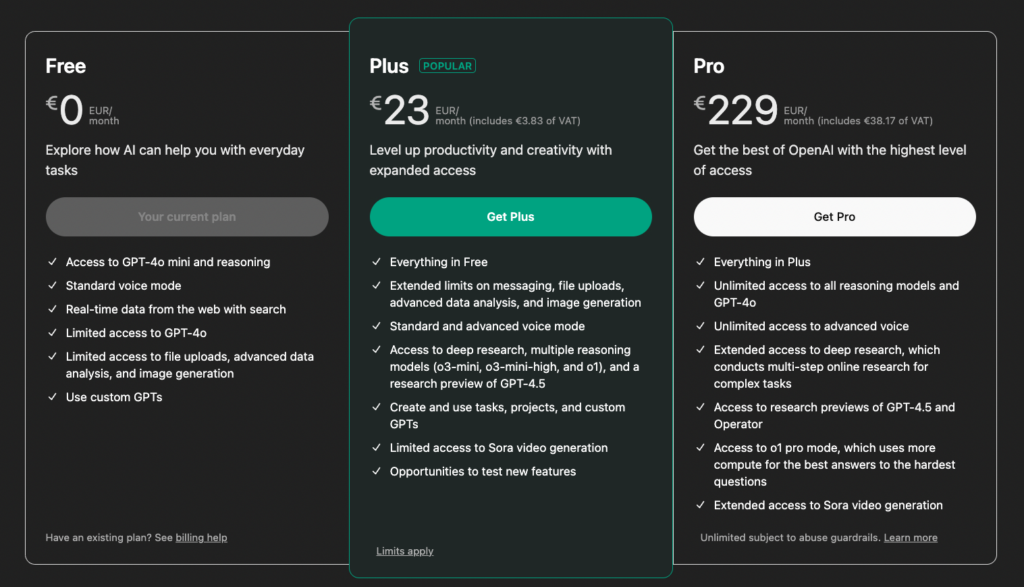

ChatGPT Plans and Pricing

ChatGPT is designed for all users, providing an interactive chat where you can ask questions and receive AI-generated responses. Its pricing follows a simple SaaS-style model, making it easy to understand. ChatGPT’s tiered pricing structure addresses different user needs and usage patterns. First let’s see the pricing plans for ChatGPT

Free Plan (€0/month):

- Access to GPT-4o mini and basic features.

Plus Plan (€23/month, incl. VAT):

- Enhanced access with extended limits on messaging, file uploads, advanced data analysis, and image generation.

Pro Plan (€229/month, incl. VAT):

- Unlimited access to all reasoning models, GPT-4o, advanced voice features, and additional advanced functionalities.

Team Plan:

- Designed for teams of 2 or more users.

- Priced at $30 per user/month when billed monthly, or $25 per user/month when billed annually.

OpenAI API pricing for the main products

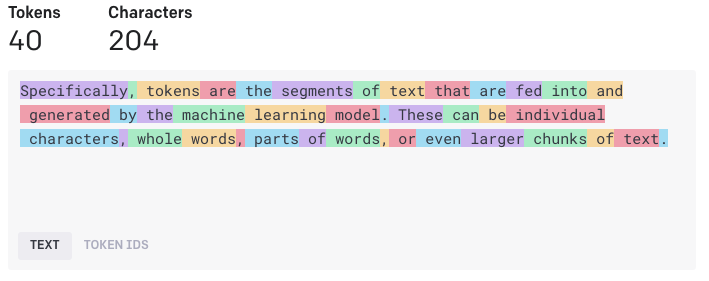

Understanding Tokens: The Basis of OpenAI Pricing

Before diving into specific pricing details, it’s essential to understand what tokens are and how they impact costs when using OpenAI’s services.

Tokens are the fundamental units of text that language models process. Rather than counting whole words, OpenAI models break text into smaller pieces called tokens:

- A token represents approximately 4 characters of text in English

- For English text, 1 token equals roughly ¾ of a word on average

- A 1-page document (about 500 words) typically requires approximately 650-750 tokens

- Punctuation, spaces, and formatting characters also count as tokens

- Code and non-English languages may use more tokens per word

How Token Pricing Works

OpenAI’s pricing model is primarily based on token usage, with different costs for:

- Input tokens: Text you send to the model (your prompts or content to process)

- Output tokens: Text generated by the model (its responses)

For example, if you send a 100-word prompt (approximately 130 tokens) to GPT-4o and receive a 300-word response (approximately 400 tokens), you would be charged:

- 130 input tokens × $0.005 per 1,000 tokens = $0.00065

- 400 output tokens × $0.015 per 1,000 tokens = $0.006

- Total cost: $0.00665

Context Windows and Token Limits

The “context window” refers to how much text a model can consider at once:

- Larger context windows allow processing more extensive documents or longer conversations

- Each model has a maximum token limit (e.g., GPT-4o can process up to 128,000 tokens at once)

- The entire conversation history, including system messages, user inputs, and model responses, must fit within this token limit

Understanding tokens is critical for both cost management and effective use of OpenAI’s models, as it directly impacts pricing and determines how much information you can process in a single interaction.

The different API products of OpenAI

OpenAI offers a range of AI-powered APIs designed for different use cases, including text generation, image creation, speech processing, and AI-powered search tools. These APIs provide powerful capabilities for developers, businesses, and researchers looking to integrate AI into their products. The pricing of each API varies based on complexity, performance, and usage.

At the core of OpenAI’s offering are GPT models, which handle text-based tasks such as chatbots, content creation, and coding assistance. For those looking to generate AI-powered images, OpenAI provides DALL·E, a model that creates high-quality visuals from text prompts. Businesses needing speech recognition or text-to-speech capabilities can leverage models like Whisper and GPT-4o Transcribe, while Web Search and Embeddings enhance AI applications by providing real-time information retrieval and better contextual understanding.

Now, let’s take a closer look at each category of OpenAI’s API products and their pricing.

OpenAI API pricing for the various APIs

| Model | Use Case | Cost per 1M Input Tokens | Cost per 1M Output Tokens |

|---|---|---|---|

| GPT-4.5 Preview | Most advanced | $75 | $150 |

| GPT-4o | High performance, low cost | $2.50 | $10 |

| GPT-4o-Mini | Budget-friendly | $0.15 | $0.60 |

| GPT-3.5 Turbo | Cheapest chat model | $0.50 | $1.50 |

| Whisper | Transcription | $0.006 per minute | – |

| DALL·E 3 | Image generation | $0.04 per image | – |

For the complete table of OpenAI API prices, you can visit the official OpenAI pricing page.

1. GPT Models – AI-Powered Text Generation

GPT models are OpenAI’s flagship AI products, designed for text generation, natural language understanding, and conversation-based applications. These models can write articles, summarize content, translate text, generate code, and power AI chatbots.

GPT-4o Pricing

- GPT-4o (2024-08-06): $2.50 per 1M input tokens, $1.25 for cached input, and $10.00 per 1M output tokens.

- GPT-4o Mini: $0.15 per 1M input tokens, $0.075 for cached input, and $0.60 per 1M output tokens.

- GPT-4.5 Preview: A more advanced model at a premium price of $75.00 per 1M input tokens and $150.00 per 1M output tokens.

For companies with high-volume text generation needs, OpenAI offers a batch API at discounted rates.

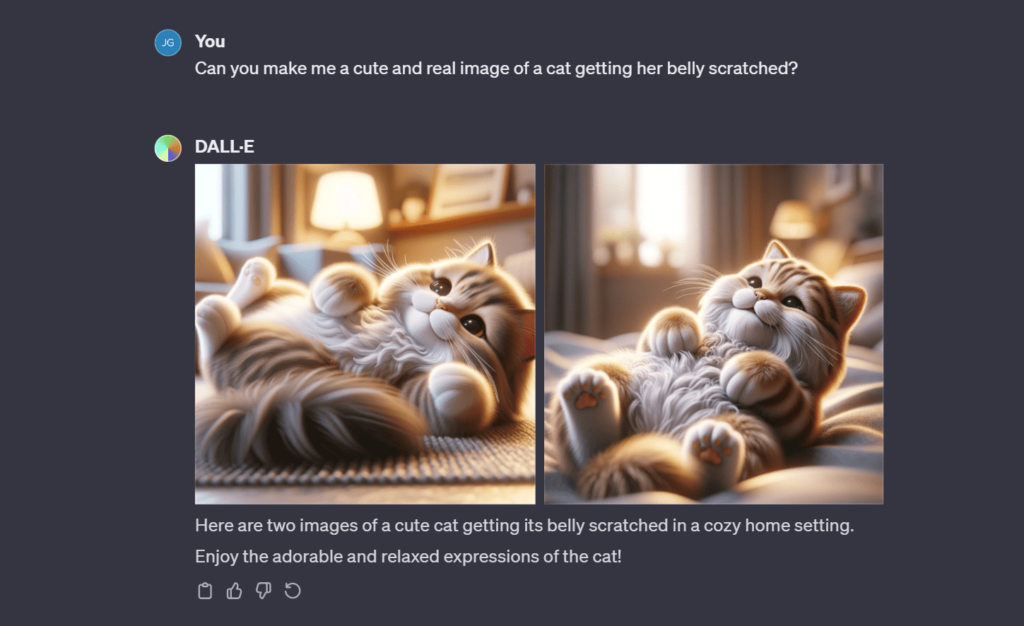

2. DALL·E – AI Image Generation

DALL·E is OpenAI’s solution for generating images from text descriptions. It’s commonly used for marketing, design, content creation, and AI-assisted art.

DALL·E Pricing

- DALL·E 3 (Standard): $0.04 per image (1024×1024) and $0.08 (1024×1792).

- DALL·E 3 (HD): $0.08 per image (1024×1024) and $0.12 (1024×1792).

- DALL·E 2: More budget-friendly, with prices ranging from $0.016 to $0.02 per image depending on resolution.

3. Whisper & GPT-4o Transcribe – Speech Recognition and Audio Processing

OpenAI provides speech-to-text and text-to-speech models, making it easy to convert spoken words into text or generate natural-sounding speech.

Transcription Pricing

- Whisper: $0.006 per minute.

- GPT-4o Transcribe: $2.50 per 1M input tokens, $10.00 per 1M output tokens, or $0.006 per minute.

- GPT-4o Mini Transcribe: A more affordable option at $1.25 per 1M input tokens, $5.00 per 1M output tokens, or $0.003 per minute.

Text-to-Speech (TTS) Pricing

- TTS: $15.00 per 1M characters.

- TTS HD: $30.00 per 1M characters.

- GPT-4o Mini TTS: $0.60 per 1M input tokens and $0.015 per minute.

4. Web Search & Embeddings – AI-Powered Information Retrieval

To enhance AI applications, OpenAI offers Web Search and Embeddings, which enable models to retrieve and understand relevant information in real time.

Web Search Pricing

- GPT-4o Search Preview: Starts at $30.00 per 1,000 searches (low context size) and increases to $50.00 for high context size.

- GPT-4o Mini Search Preview: Starts at $25.00 per 1,000 searches.

Embeddings Pricing

- Text-Embedding-3 Small: $0.02 per 1M tokens.

- Text-Embedding-3 Large: $0.13 per 1M tokens.

5. Fine-Tuning for Custom AI Models

For businesses looking to customize AI models for specific tasks, OpenAI provides fine-tuning options.

Fine-Tuning Pricing

- GPT-4o: Training at $25.00 per 1M tokens, input processing at $3.75, and output at $15.00.

- GPT-3.5 Turbo: Training at $8.00 per 1M tokens, input at $3.00, and output at $6.00.

- Legacy models like Davinci-002 and Babbage-002: More affordable but less advanced options.

6. Built-In AI Tools and Additional Services

OpenAI also offers additional AI tools that enhance functionality.

Additional Tool Pricing

- Code Interpreter: $0.03 per session.

- File Search Storage: $0.10 per GB per day (first 1GB free).

- Web Search Tool Calls: $2.50 per 1,000 calls (excludes Assistants API usage).

Choosing the Right OpenAI API for Your Needs

Each of OpenAI’s APIs is designed for specific use cases, with pricing that reflects their performance and complexity. Whether you’re looking for text generation, image creation, transcription, or AI-powered search, OpenAI offers a variety of models to fit different needs and budgets.

For businesses with high-volume needs, OpenAI provides discounted rates for batch processing and fine-tuning, making it easier to scale AI applications efficiently. By understanding the pricing structure, you can select the best model for your use case while optimizing costs.

With the rapid evolution of AI, OpenAI continues to enhance its APIs, making them more powerful and accessible for developers worldwide. If you’re looking to integrate AI into your application, these pricing details will help you make an informed decision.

Competitor Pricing Comparison

Understanding how OpenAI’s pricing compares to other leading AI providers helps in making informed decisions:

| Service | OpenAI (GPT-4o) | Anthropic (Claude 3) | Mistral | Google Gemini |

|---|---|---|---|---|

| Chat Model (per M Token) | Input: $5 Output: $15 | Haiku: $0.25/$1.25 Sonnet: $3/$15 Opus: $15/$75 | Small: $2 Large: $8 | 1.5 Pro: $7/$21 |

| Image Generation | DALL-E 3: $0.04 per 1024×1024 image | Not Available | Not Available | Gemini Pro Vision: Custom pricing |

| Speech-to-Text | Whisper: $0.006 per minute | Not Available | Not Available | Google Speech-to-Text: $0.012 per minute |

| Text-to-Speech | Not Available | Not Available | Not Available | Not Available |

| Code Assistance | GitHub Copilot: $10/month | Not Available | Not Available | Gemini for Developers: $19/user/month |

| Vision Capabilities | Vision: $0.03/1K tokens | Claude 3 supports vision | Not Widely Available | Gemini Pro Vision Available |

12 Strategies to Optimize OpenAI Costs

1. Choose the Appropriate Model

Selecting the right AI model is crucial for cost-effective usage. GPT-3.5-Turbo is ideal for straightforward tasks like basic classification and simple content generation, offering a more economical option. In contrast, GPT-4o should be reserved for complex reasoning, advanced coding challenges, and specialized tasks that require deeper cognitive processing. For repetitive, domain-specific tasks, consider investing in fine-tuning a model to create a more specialized and potentially more cost-efficient solution.

2. Optimize Token Usage

Efficient token management is key to controlling costs. Craft prompts that are concise and highly specific, minimizing unnecessary input tokens. Strategically use system messages to maintain context without expanding token count. Implement client-side caching for repeated requests to avoid redundant API calls. When designing multi-turn conversations, structure them thoughtfully to maximize context while minimizing token consumption.

3. Implement Request Batching

Batching similar requests can significantly reduce connection overhead and improve overall throughput. This approach is particularly effective for processing embeddings and classification tasks. By consolidating multiple requests into a single API call, you can achieve more efficient resource utilization and potentially lower overall costs.

4. Set Up Monitoring and Alerts

Develop a comprehensive monitoring strategy to track API usage effectively. Create detailed usage dashboards that provide real-time insights into consumption patterns. Establish spending alerts and implement rate limiting to prevent unexpected cost overruns. Conduct weekly reviews of usage patterns to identify optimization opportunities and potential inefficiencies. Consider implementing automatic cutoffs to prevent unexpected usage spikes from generating excessive costs.

5. Leverage Temperature Settings

Temperature settings play a crucial role in output generation and cost optimization. Lower temperature settings (between 0.0 and 0.3) produce more deterministic and focused outputs. This approach can reduce the number of API calls needed to achieve the desired result, as the model generates more consistent and predictable responses.

6. Use Compression Techniques

For processing long-form content within token limitations, implement advanced compression techniques. Recursive summarization is an effective method for breaking down large documents into manageable chunks, allowing you to process extensive content without exceeding token limits or incurring additional costs.

7. Hybrid Approach with Open-Source Models

Develop a tiered AI approach that leverages both open-source and proprietary models. Use open-source models for initial processing and filtering tasks, reserving OpenAI’s more powerful models for high-value operations requiring advanced capabilities. This strategy can provide a cost-effective balance between performance and expense.

8. Optimize Image Generation

When using DALL·E for image generation, focus on creating precise and detailed prompts to minimize the number of iterations required. Select appropriate resolutions based on your specific needs, avoiding unnecessary computational overhead. Consider batch generation for related concepts to maximize efficiency.

9. Leverage Enterprise Discounts

For organizations with high-volume API usage, explore enterprise agreements that offer volume-based pricing commitments. These negotiated contracts can provide significant cost savings compared to standard pricing tiers.

10. Continuous Optimization

Remember that cost optimization is an ongoing process. Regularly review and adjust your AI strategy, staying informed about new models, pricing changes, and emerging optimization techniques.

11. Implement Intelligent Caching Strategies

Caching is a powerful technique for reducing API costs and improving response times. Implement a robust caching mechanism that stores and reuses API responses for identical or similar queries. This approach is particularly effective for repetitive tasks, static content generation, and scenarios with frequent, similar requests. OpenAI states that you can save 50% with the Batch API.

Consider these caching strategies:

- Implement server-side or client-side caching systems that store API responses

- Use key-based caching with careful consideration of:

- Input parameters

- Model used

- Temperature settings

- Timestamp for cache invalidation

For dynamic content, implement time-based or conditional caching. For instance, cache responses for relatively static queries like code explanations, general knowledge questions, or formatting tasks. Use shorter cache periods for time-sensitive or rapidly changing content.

When designing a caching strategy, consider:

- Cache key generation: Create unique keys that capture all relevant query parameters

- Cache duration: Balance between data freshness and cost savings

- Cache storage: Use in-memory caches like Redis or distributed caching systems

- Fallback mechanisms: Ensure smooth API calls when cached data is unavailable

Example pseudo-code for a simple caching approach:

def get_ai_response(query, model, temperature):

cache_key = generate_cache_key(query, model, temperature)

# Check if response is in cache

cached_response = cache.get(cache_key)

if cached_response:

return cached_response

# If not in cache, make API call

response = openai_api_call(query, model, temperature)

# Store in cache with expiration

cache.set(cache_key, response, expiration=3600) # Cache for 1 hour

return response

By implementing intelligent caching, you can significantly reduce API costs, minimize latency, and optimize your overall AI integration strategy.

12. Compare OpenAI to alternatives

Staying competitive in AI implementation requires ongoing assessment of alternative language models and their pricing structures. While OpenAI has been a market leader, emerging providers like Anthropic, Mistral, and Google’s Gemini are offering increasingly compelling alternatives that can potentially reduce costs and provide unique capabilities.

- Anthropic API pricing page: https://www.anthropic.com/pricing#anthropic-api

- Mistral AI : https://mistral.ai/products/la-plateforme#pricing

- Gemini: https://ai.google.dev/gemini-api/docs/pricing

Conclusion: Maximizing Your AI Investment

OpenAI’s diverse product lineup offers powerful capabilities across conversation, code generation, image creation, and audio processing. By understanding the nuanced pricing structures and implementing the optimization strategies outlined in this guide, organizations and individuals can maximize the value derived from these advanced AI tools.

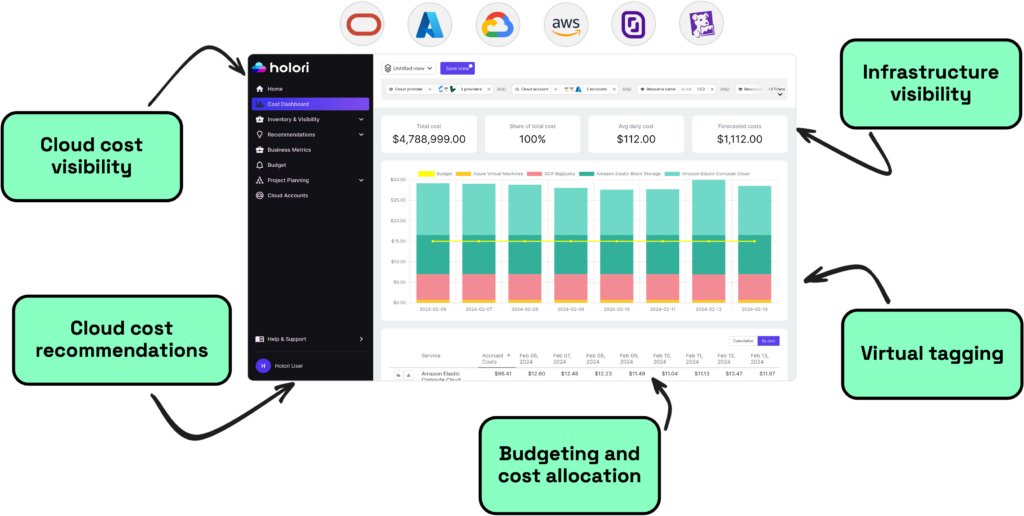

For businesses building AI-powered applications, careful planning of API usage patterns and regular monitoring in a FinOps platform like Holori can lead to significant cost savings without sacrificing performance. Meanwhile, individual users can select the most appropriate subscription tier based on their specific needs.

As the AI landscape continues to evolve rapidly, staying informed about pricing updates, new features, and emerging best practices remains crucial for optimizing your AI investments. By applying these strategies, you can ensure that OpenAI’s powerful capabilities enhance your workflow without unnecessary expenses.

Soon, Holori will integrate OpenAI and Anthropic in its FinOps platform. In the meantime, if you want to track your cloud costs, you are at the right place : https://app.holori.com/