Amazon Web Services (AWS) Aurora is a fully managed relational database, compatible with MySQL and PostgreSQL, designed for high performance and scalability. Aurora is a specialized database engine within AWS RDS (Relational Database Service), Amazon’s managed database service, which also supports engines such as MySQL, PostgreSQL, MariaDB, SQL Server, and Oracle. Aurora aims to deliver the availability and reliability of commercial databases at a fraction of the cost, with performance and feature enhancements over standard MySQL and PostgreSQL. However, while Aurora offers a wealth of features, its costs can be hard to manage. This article examines AWS Aurora’s pricing structure, highlights its key components, and provides actionable strategies to optimize your spending.

What is AWS Aurora?

AWS Aurora is a cloud-based relational database that combines the advantages of enterprise-grade databases with the cost-efficiency of open-source alternatives. As part of AWS RDS, Aurora benefits from the security, monitoring, and management capabilities of the AWS ecosystem while adding enhancements for scalability and performance. Aurora automatically handles tasks like database provisioning, patching, backup, recovery, and failover, allowing developers to focus on building applications.

Aurora offers several products, including:

- Amazon Aurora MySQL-Compatible Edition: Designed for applications using MySQL, it provides high performance and availability.

- Amazon Aurora PostgreSQL-Compatible Edition: Tailored for PostgreSQL users, it supports many PostgreSQL features while enhancing performance and scalability.

- Amazon Aurora Serverless: A serverless option that automatically adjusts capacity based on demand, allowing users to pay only for what they consume.

- Aurora Global Database: Enables cross-region replication for applications that require low-latency global access, making it ideal for disaster recovery and multi-region deployments.

- Aurora I/O-Optimized: A cluster storage configuration with no separate charges for I/O requests, in exchange for higher instance and storage prices. It offers predictable pricing and can be more cost-effective for I/O-intensive applications.

With features like serverless options, read replicas, multi-AZ deployments, and automatic scaling, Aurora provides flexibility and power, making it a popular choice for modern cloud-native applications.

AWS RDS vs. Aurora: Key Differences

- Performance: RDS supports multiple database engines with reliable performance, while Aurora is optimized for higher throughput: AWS cites up to five times the throughput of standard MySQL and three times that of standard PostgreSQL.

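- Scalability: With standard RDS you provision storage up front (storage autoscaling is optional) and scale compute by changing the instance class, whereas Aurora storage grows automatically up to 128 TB and Aurora Auto Scaling can add or remove read replicas.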

- Availability: RDS offers Multi-AZ deployments for durability and failover, whereas Aurora is built for high availability from the ground up, storing six copies of your data across three availability zones.

- Cost: RDS is generally cheaper and suits smaller or steady workloads. Aurora's instance and I/O charges are higher, but its performance and managed scaling can make it more cost-efficient for large, demanding workloads.

- Compatibility: RDS supports a wider range of databases. Aurora is limited to MySQL and PostgreSQL but includes tools for easy migration.

- Use Cases: Choose RDS for small to medium databases; Aurora suits high-performance, large-scale applications.

AWS Aurora Pricing Breakdown

Aurora pricing is complex, involving several factors that influence the overall cost. Below, we’ll break down the key pricing components:

1. Instance Pricing

AWS Aurora charges for the compute capacity of the DB instances you deploy. Instances come in instance classes (e.g., db.r6g.large, db.t4g.medium) grouped into families, each priced according to its vCPU and memory specifications, and On-Demand prices are quoted per instance-hour. Prices vary by AWS Region and database engine, so treat the rates in this article as examples.

- Memory-Optimized Instances (e.g., db.r5, db.r6g): The usual choice for production workloads, pairing each vCPU with plenty of memory so more of the working set stays cached.

- Burstable Instances (e.g., db.t3, db.t4g): Suitable for workloads with low or intermittent CPU demand, such as development and test environments.

| Standard Instances – Current Generation | Aurora Standard (Price Per Hour) | Aurora I/O-Optimized (Price Per Hour) |
|---|---|---|
| db.t4g.medium | $0.073 | $0.095 |
| db.t4g.large | $0.146 | $0.19 |
| db.t3.small | $0.041 | $0.053 |
| db.t3.medium | $0.082 | $0.107 |
| db.t3.large | $0.164 | $0.213 |
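
To compare instance classes on a monthly basis, it helps to convert hourly rates into monthly figures. The Python sketch below assumes an always-on instance and a 730-hour month (8,760 hours / 12), and uses only the example rates from the table above; the helper names are illustrative. It also makes visible that, in these figures, I/O-Optimized instances cost roughly 30% more per hour than Aurora Standard.

```python
# Illustrative On-Demand hourly rates (USD) copied from the table above:
# (Aurora Standard, Aurora I/O-Optimized). Real rates vary by Region and engine.
HOURLY_RATES = {
    "db.t4g.medium": (0.073, 0.095),
    "db.t4g.large": (0.146, 0.19),
    "db.t3.small": (0.041, 0.053),
    "db.t3.medium": (0.082, 0.107),
    "db.t3.large": (0.164, 0.213),
}

# Common approximation of one month: 8,760 hours per year / 12 months.
HOURS_PER_MONTH = 730


def monthly_instance_cost(hourly_rate: float, instances: int = 1,
                          hours: float = HOURS_PER_MONTH) -> float:
    """Cost of running `instances` DB instances for `hours` hours."""
    return round(hourly_rate * instances * hours, 2)


def io_optimized_premium(instance_class: str) -> float:
    """Fractional price increase of I/O-Optimized over Standard for one class."""
    standard, io_optimized = HOURLY_RATES[instance_class]
    return io_optimized / standard - 1


if __name__ == "__main__":
    for name, (standard, io_opt) in HOURLY_RATES.items():
        print(f"{name}: Standard ${monthly_instance_cost(standard):.2f}/month, "
              f"I/O-Optimized ${monthly_instance_cost(io_opt):.2f}/month "
              f"(+{io_optimized_premium(name):.0%})")
```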

2. Aurora Storage Pricing

AWS Aurora’s storage is billed separately based on the amount of data stored in your database. Aurora automatically scales storage capacity up to 128 TB as your database grows, charging per GB-month.

Key aspects of Aurora storage costs include:

- Data Storage: Billed per GB used per month.

- Backup Storage: Storage for automated backups and snapshots is free up to the total size of your Aurora database storage; backup storage beyond that is billed per GB-month.

- I/O Operations: With Aurora Standard, Aurora charges for read and write I/O requests made to the storage layer, billed per million requests. With Aurora I/O-Optimized, I/O is included in the instance and storage prices.

| Component | Aurora Standard | Aurora I/O-Optimized |
|---|---|---|
| Storage Rate | $0.10 per GB-month | $0.225 per GB-month |
| I/O Rate | $0.20 per 1 million requests | Included |
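
Whether I/O-Optimized pays off depends on how much of your bill is I/O. AWS's guidance is that I/O-Optimized tends to be more cost-effective when I/O charges exceed about 25% of total Aurora spend. The sketch below, a rough estimate rather than a billing calculator, compares the two configurations using the example rates from the tables above (with db.t4g.medium as an arbitrary instance class) and ignores backup storage and data transfer.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # approximate hours in a month


@dataclass(frozen=True)
class AuroraRates:
    instance_per_hour: float     # USD per instance-hour
    storage_per_gb_month: float  # USD per GB-month
    io_per_million: float        # USD per million I/O requests (0 if included)


# Example rates from the tables above; db.t4g.medium is an arbitrary example class.
STANDARD = AuroraRates(0.073, 0.10, 0.20)
IO_OPTIMIZED = AuroraRates(0.095, 0.225, 0.0)


def monthly_cost(rates: AuroraRates, instances: int, storage_gb: float,
                 io_requests: float) -> dict:
    """Split an estimated monthly bill into compute, storage and I/O."""
    compute = rates.instance_per_hour * instances * HOURS_PER_MONTH
    storage = rates.storage_per_gb_month * storage_gb
    io = rates.io_per_million * io_requests / 1_000_000
    return {"compute": compute, "storage": storage, "io": io,
            "total": compute + storage + io}


def compare(instances: int, storage_gb: float, io_requests: float) -> str:
    """Name the cheaper configuration and report I/O's share of the Standard bill."""
    std = monthly_cost(STANDARD, instances, storage_gb, io_requests)
    opt = monthly_cost(IO_OPTIMIZED, instances, storage_gb, io_requests)
    io_share = std["io"] / std["total"]
    winner = ("Aurora Standard" if std["total"] <= opt["total"]
              else "Aurora I/O-Optimized")
    return f"{winner} (I/O is {io_share:.0%} of the Standard bill)"


if __name__ == "__main__":
    # One instance, 500 GB stored, 2 billion I/O requests per month.
    print(compare(1, 500, 2_000_000_000))
    # Same cluster with only 50 million I/O requests per month.
    print(compare(1, 500, 50_000_000))
```

In the first case I/O dominates the Standard bill and I/O-Optimized comes out cheaper; in the second, the higher storage rate makes Aurora Standard the better fit.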

3. Aurora Serverless Pricing

For unpredictable or variable workloads, Aurora Serverless provides a cost-effective option. Instead of deploying fixed instances, Aurora Serverless scales automatically, adjusting capacity based on application demand.

- Aurora Capacity Unit (ACU): Serverless capacity is measured in ACUs, priced per ACU-hour and billed per second. Each ACU provides approximately 2 GiB of memory with corresponding CPU and networking.

| Measure | Aurora Standard (per ACU-hour) | Aurora I/O-Optimized (per ACU-hour) |
|---|---|---|
| Aurora Capacity Unit | $0.12 | $0.16 |
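
Because Serverless capacity is billed per second but priced per ACU-hour, a day with short peaks can cost far less than its peak capacity suggests. The sketch below converts a hypothetical capacity timeline, expressed as (ACUs, seconds) segments, into ACU-hours and compute cost using the example rates above. It assumes Aurora Serverless v2, where capacity changes in 0.5-ACU increments, and covers compute only; storage and I/O are billed separately.

```python
# Example per-ACU-hour rates from the table above (USD).
ACU_HOUR_RATES = {"standard": 0.12, "io_optimized": 0.16}


def acu_hours(segments):
    """Sum (capacity_in_acus, duration_in_seconds) segments into ACU-hours."""
    total_acu_seconds = 0.0
    for acus, seconds in segments:
        # Serverless v2 capacity moves in 0.5-ACU steps.
        if acus < 0 or acus * 2 != int(acus * 2):
            raise ValueError(f"capacity must be a non-negative multiple of 0.5, got {acus}")
        total_acu_seconds += acus * seconds
    return total_acu_seconds / 3600


def serverless_compute_cost(segments, configuration="standard"):
    """Compute-only cost; storage and I/O are billed separately."""
    return acu_hours(segments) * ACU_HOUR_RATES[configuration]


if __name__ == "__main__":
    # A hypothetical day: 22 hours idling at 0.5 ACU, then a 2-hour peak at 16 ACUs.
    day = [(0.5, 22 * 3600), (16, 2 * 3600)]
    print(acu_hours(day))                          # 43.0
    print(round(serverless_compute_cost(day), 2))  # 5.16
```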

4. Data Transfer Costs

Data transfer is an often-overlooked aspect of Aurora pricing. Charges may apply when data is transferred:

- Between AWS Regions.

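- From Aurora out to the internet or other locations outside AWS.

- Across Availability Zones (AZs): Aurora does not charge for its own storage replication across AZs, but traffic between your application (for example, EC2 instances) and a DB instance in a different AZ of the same Region incurs data transfer charges.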
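
Cross-AZ charges add up quietly because they apply to traffic in both directions. The sketch below estimates monthly transfer costs from traffic volumes; the per-GB rates passed in are placeholders, not published prices, so look up current rates for your Region. The factor of 2 on cross-AZ traffic reflects that EC2 Regional data transfer charges apply on both sides of a transfer between an EC2 instance and a DB instance in different AZs.

```python
def monthly_transfer_cost(cross_az_gb, inter_region_gb, internet_out_gb, rates):
    """Estimate monthly data transfer charges.

    `rates` maps "cross_az", "inter_region" and "internet_out" to USD per GB.
    Cross-AZ traffic between an application instance and the database is
    charged on both sides of the transfer, hence the factor of 2.
    """
    cross_az = cross_az_gb * rates["cross_az"] * 2
    inter_region = inter_region_gb * rates["inter_region"]
    internet_out = internet_out_gb * rates["internet_out"]
    return {"cross_az": cross_az, "inter_region": inter_region,
            "internet_out": internet_out,
            "total": cross_az + inter_region + internet_out}


if __name__ == "__main__":
    # Placeholder rates for illustration only; check current rates for your Region.
    assumed_rates = {"cross_az": 0.01, "inter_region": 0.02, "internet_out": 0.09}
    print(monthly_transfer_cost(1_000, 200, 100, assumed_rates))
```

Keeping application servers in the same AZ as the instance they query most is one way to shrink the first term, at the cost of some resilience.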

Cost Optimization Strategies for AWS Aurora

Now that we’ve outlined the core components of AWS Aurora pricing, let’s explore actionable strategies to optimize your Aurora costs.

1. Choose the Right Instance Type

Choosing the appropriate instance type is crucial for controlling costs. Here are a few tips:

- Start with Burstable Instances (e.g., db.t3): For development and test environments, or while you are still unsure about a workload's requirements, a burstable instance keeps costs low. AWS recommends T classes for development and test servers rather than production, since they are not designed for sustained high CPU load.

- Leverage Instance Sizing Tools: Use AWS Cost Explorer or FinOps tools like Holori to analyze past usage and right-size your instances.

- Use Memory-Optimized Instances Wisely: Reserve memory-optimized instances for heavy-duty production workloads that genuinely require the increased memory capacity.
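
A simple right-sizing check is to look at a high percentile of CPU utilization rather than the average. The sketch below assumes you have exported CPUUtilization datapoints for the DB instance (for example from Amazon CloudWatch) into a Python list; the function name and the 20%/80% thresholds are hypothetical starting points, not AWS recommendations, and a real decision should also consider memory and I/O.

```python
from statistics import quantiles


def rightsizing_hint(cpu_percent_samples, low=20.0, high=80.0):
    """Suggest a resizing direction from the 95th percentile of CPU utilization.

    The 20%/80% thresholds are hypothetical defaults, not AWS guidance.
    """
    if len(cpu_percent_samples) < 2:
        raise ValueError("need at least two samples")
    # n=20 yields 19 cut points; the last one is the 95th percentile.
    p95 = quantiles(cpu_percent_samples, n=20, method="inclusive")[-1]
    if p95 < low:
        return f"p95 CPU {p95:.0f}%: consider a smaller instance class"
    if p95 > high:
        return f"p95 CPU {p95:.0f}%: consider a larger instance class"
    return f"p95 CPU {p95:.0f}%: current size looks reasonable"


if __name__ == "__main__":
    # Hypothetical hourly CPU readings for a mostly idle instance.
    samples = [12, 15, 9, 18, 14, 11, 16, 13]
    print(rightsizing_hint(samples))
```

Using a percentile instead of the mean avoids downsizing an instance whose short but regular peaks would then saturate it.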

2. Use Aurora Serverless for Variable Workloads

Aurora Serverless can save costs for applications with fluctuating demand. Instead of paying for constant compute capacity, Serverless allows you to scale up and down automatically:

- Use Aurora Serverless v2: Compared with v1, it scales faster and in finer increments, and you set minimum and maximum ACU limits that bound both capacity and cost.

- Tune Minimum Capacity: Aurora has no separate "warm pool" feature, but setting the minimum ACU value high enough keeps frequently used data cached and helps capacity ramp up quickly when load spikes. A higher minimum also costs more during idle periods, so weigh latency against spend.
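
The minimum ACU setting is where this latency/cost trade-off shows up on the bill. The sketch below compares one day of Serverless v2 compute against an always-on provisioned instance, clamping hourly demand to the configured minimum and maximum; the demand profiles and the $0.30/hour instance rate are hypothetical, and the $0.12 ACU-hour rate comes from the Serverless pricing table above.

```python
def daily_compute_costs(hourly_acu_demand, min_acu, max_acu, acu_rate,
                        provisioned_hourly_rate):
    """Compare one day of Serverless v2 compute with an always-on instance.

    Each entry in `hourly_acu_demand` is the ACUs the workload needs for that
    hour; billed capacity is clamped to the configured [min_acu, max_acu] range.
    """
    billed_acu_hours = sum(min(max(d, min_acu), max_acu) for d in hourly_acu_demand)
    serverless = billed_acu_hours * acu_rate
    provisioned = provisioned_hourly_rate * len(hourly_acu_demand)
    return round(serverless, 2), round(provisioned, 2)


if __name__ == "__main__":
    spiky = [1] * 20 + [8] * 4   # quiet most of the day, 4-hour peak
    steady = [8] * 24            # constant load
    rate, instance = 0.12, 0.30  # $0.30/hour is a hypothetical instance rate
    print(daily_compute_costs(spiky, 0.5, 16, rate, instance))   # (6.24, 7.2)
    print(daily_compute_costs(spiky, 2, 16, rate, instance))     # (8.64, 7.2)
    print(daily_compute_costs(steady, 0.5, 16, rate, instance))  # (23.04, 7.2)
```

With a 0.5-ACU minimum, the spiky day is cheaper on Serverless; raising the minimum to 2 ACUs, or running a steady load, flips the comparison in favor of the provisioned instance.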

3. Leverage Aurora Read Replicas

Aurora supports up to 15 read replicas, which can help balance read-heavy workloads across multiple instances:

- Optimize Read Operations: Use read replicas to offload heavy read queries from the primary instance. This can reduce the size requirement for the primary instance, lowering overall costs.

- Global Databases: If you have a multi-region architecture, consider Aurora Global Database. It adds costs, including cross-region replication, but can reduce latency and improve user experience by serving reads from secondary regions close to your users.
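
Aurora replicas share the cluster's storage volume, so each replica adds instance-hours but not a second copy of storage charges. The sketch below compares a single large writer with a smaller writer plus one replica; all hourly rates are hypothetical, and with Aurora Standard the reads served by replicas still generate billable I/O, which this sketch leaves out.

```python
HOURS_PER_MONTH = 730  # approximate hours in a month


def cluster_monthly_cost(writer_rate, replica_rates, storage_gb,
                         storage_rate_gb_month):
    """Monthly compute + storage for one Aurora cluster.

    Replicas add instance-hours but share the cluster volume, so storage is
    counted once regardless of how many replicas there are.
    """
    compute = (writer_rate + sum(replica_rates)) * HOURS_PER_MONTH
    storage = storage_gb * storage_rate_gb_month
    return round(compute + storage, 2)


if __name__ == "__main__":
    # Hypothetical hourly rates for illustration only.
    big_writer_only = cluster_monthly_cost(1.16, [], 500, 0.10)
    smaller_writer_plus_replica = cluster_monthly_cost(0.58, [0.29], 500, 0.10)
    print(big_writer_only, smaller_writer_plus_replica)
```

The saving only materializes if offloading reads genuinely lets the writer drop a size; a replica the same size as the writer it was meant to shrink simply doubles compute.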

4. Use Reserved Instances for Long-Term Workloads

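If you have predictable workloads, consider Reserved Instances (RIs). Reserved DB instances can offer substantial savings over On-Demand pricing, with the deepest discounts for longer terms and more upfront payment.

- Term and Payment Options: Reservations run for one or three years and can be paid No Upfront, Partial Upfront, or All Upfront; they suit steady-state workloads that run around the clock.

- Size Flexibility: Unlike EC2, RDS (including Aurora) does not offer Convertible RIs, but Aurora reservations are size-flexible within an instance family, so a reservation can cover a different size of the same family in the same Region as your workload evolves.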
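
To judge a reservation, amortize the upfront payment over the term and compare the resulting effective hourly rate with On-Demand. Because a reservation is billed whether or not the instance runs, the same ratio also gives the minimum utilization at which it breaks even. The function names are illustrative and the prices in the example are hypothetical.

```python
HOURS_PER_YEAR = 8760


def effective_hourly_rate(upfront: float, recurring_hourly: float,
                          term_years: int) -> float:
    """Spread the upfront payment over every hour of the term."""
    return upfront / (term_years * HOURS_PER_YEAR) + recurring_hourly


def reservation_summary(on_demand_hourly: float, upfront: float,
                        recurring_hourly: float, term_years: int) -> dict:
    effective = effective_hourly_rate(upfront, recurring_hourly, term_years)
    ratio = effective / on_demand_hourly
    return {
        "effective_hourly": effective,
        # Discount versus On-Demand if the instance runs for the whole term.
        "savings": 1 - ratio,
        # The reservation is paid regardless of usage, so it only beats
        # On-Demand if the instance would run at least this share of the time.
        "breakeven_utilization": ratio,
    }


if __name__ == "__main__":
    # Hypothetical prices: $0.29/hour On-Demand versus a 1-year reservation
    # with $900 upfront plus $0.10/hour.
    summary = reservation_summary(0.29, 900, 0.10, 1)
    print(f"savings {summary['savings']:.0%}, "
          f"break-even utilization {summary['breakeven_utilization']:.0%}")
    # prints "savings 30%, break-even utilization 70%"
```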

5. Implement Storage Optimization

Aurora automatically scales storage, which can lead to hidden costs if not managed carefully:

- Purge Unnecessary Data: Regularly clean up old logs, temp tables, and backups that are no longer needed.

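- Reduce the Data Footprint: Aurora manages backups automatically and does not expose a backup compression setting, so savings come from storing less data, for example by compressing large payloads before writing them to the database.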

- Monitor Storage I/O: Use Amazon CloudWatch to track I/O requests and identify inefficient queries that could lead to excessive costs.
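
CloudWatch I/O metrics can be turned into a cost projection. The sketch below assumes, as the Aurora CloudWatch documentation describes, that each VolumeReadIOPs and VolumeWriteIOPs datapoint is the count of billed I/O operations in a 5-minute interval; it extrapolates the observed window to a 730-hour month at the $0.20 per million rate from the storage table, which applies only to Aurora Standard. The function name is illustrative.

```python
HOURS_PER_MONTH = 730
SAMPLE_MINUTES = 5  # assumed reporting interval of VolumeReadIOPs/VolumeWriteIOPs


def projected_monthly_io_cost(read_samples, write_samples, rate_per_million=0.20):
    """Project a month of Aurora Standard I/O charges from 5-minute samples.

    Assumes each sample is the number of billed I/O operations in its
    5-minute interval and that the observed window is representative.
    """
    if len(read_samples) != len(write_samples) or not read_samples:
        raise ValueError("need equal-length, non-empty sample lists")
    observed_hours = len(read_samples) * SAMPLE_MINUTES / 60
    observed_io = sum(read_samples) + sum(write_samples)
    monthly_io = observed_io * HOURS_PER_MONTH / observed_hours
    return monthly_io / 1_000_000 * rate_per_million


if __name__ == "__main__":
    # One hypothetical day: 288 five-minute samples.
    reads = [100_000] * 288
    writes = [50_000] * 288
    print(round(projected_monthly_io_cost(reads, writes), 2))  # 262.8
```

A sudden jump in this projection after a deployment is often the quickest pointer to a new inefficient query.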

6. Take Advantage of Free Tier and Promotional Credits

AWS offers a Free Tier for new accounts and occasionally provides promotional credits that can be used to offset costs. Check the current Free Tier terms before relying on them for Aurora: the RDS Free Tier has historically covered micro instances of engines such as RDS for MySQL and PostgreSQL rather than Aurora.

- Use Free Tier and Credits for Development: Run development environments on Free Tier-eligible resources where possible, or use promotional credits to explore Aurora’s features.

- Consider Credits for Migration: AWS often offers credits for migrating workloads from on-premises databases to Aurora. Take advantage of these offers to reduce initial migration costs.

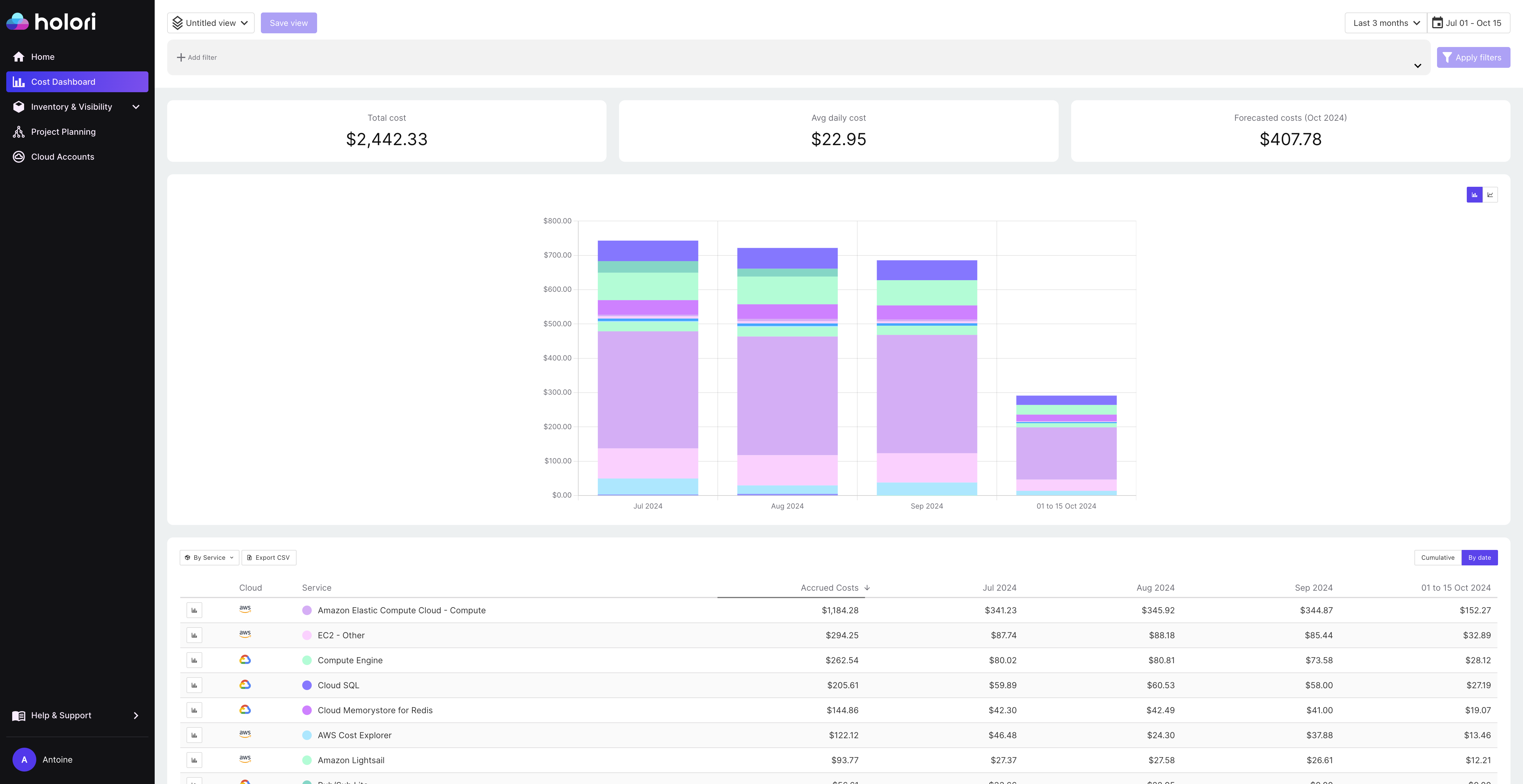

How Holori Can Help Visualize and Optimize Your Aurora Costs

Managing and optimizing cloud costs can be complex, but Holori simplifies the process with its comprehensive FinOps platform. Holori’s cost dashboards provide an intuitive interface to visualize cloud usage trends, identify key cost drivers, and gain a clear overview of spending. Users can easily track both historical and forecasted cloud costs, enabling proactive cost management. Additionally, Holori allows you to set custom budgets and receive real-time alerts when spending approaches or exceeds predefined limits, helping you stay within budget and avoid unexpected costs. Holori also offers actionable recommendations, highlighting underutilized resources and potential savings to optimize costs while maintaining performance.

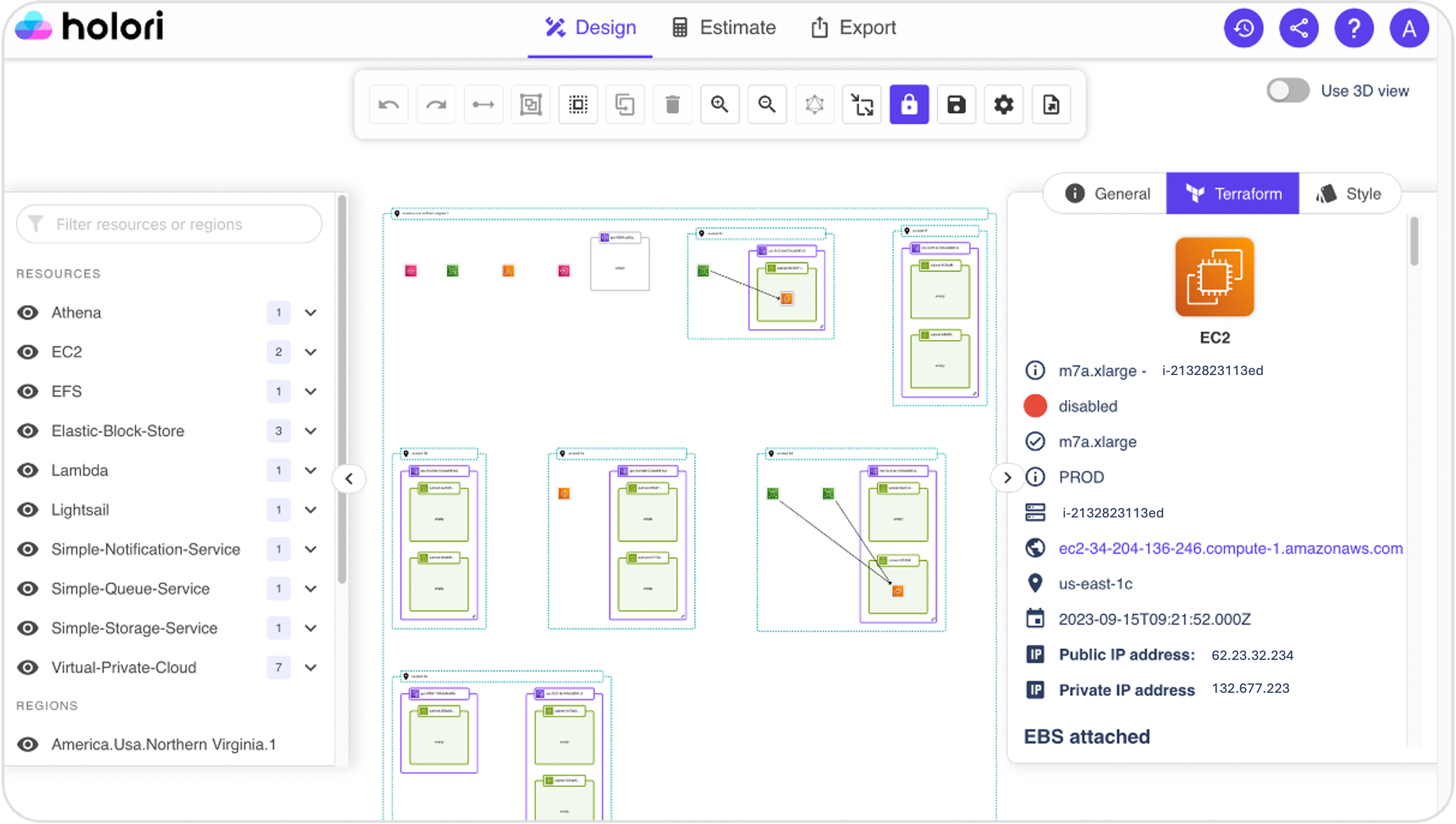

Holori automatically generates infrastructure diagrams that make it easy to visualize the configuration of your Aurora deployment. These diagrams allow you to inspect the architecture, monitor key resources, and ensure your database setup is aligned with best practices. This visualization helps identify inefficiencies, optimize configurations, and manage Aurora deployments effectively, all within a user-friendly interface.

Case Study: Nintendo and DeNA Achieve Performance and Cost Efficiency with AWS Aurora

Nintendo and DeNA (a Japanese mobile game developer) partnered to launch Nintendo’s mobile gaming service on AWS Aurora. The goal was to build a scalable and reliable database infrastructure to handle unpredictable, global demand for mobile games. They needed a solution that would provide high availability, performance, and cost efficiency.

Challenges

Nintendo and DeNA required a database capable of managing significant fluctuations in traffic, especially during game launches. Traditional databases would have involved costly and complex configurations to handle spikes, and the companies wanted a solution that would allow them to scale easily while optimizing operational costs.

Solution

By choosing Amazon Aurora, Nintendo and DeNA were able to implement a database solution that met their performance and scalability needs:

- Aurora’s Automated Scaling allowed them to adjust capacity in real-time based on player demand, reducing the need for manual intervention.

- With multi-AZ replication, they achieved high availability and durability, crucial for maintaining a seamless gaming experience globally.

- The ability to manage read replicas enabled them to efficiently handle read-heavy workloads without compromising performance.

Results

The switch to Aurora allowed Nintendo and DeNA to achieve:

- Cost Savings by eliminating the need to over-provision infrastructure for peak loads. Aurora’s pay-as-you-go model ensured they paid only for what they used.

- Improved Scalability, with the database scaling to absorb demand spikes, especially during game launches.

- High Reliability and Performance, maintaining low latency and a seamless experience for millions of global users.

In summary, AWS Aurora helped Nintendo and DeNA to launch and maintain a robust mobile gaming service that delivered exceptional performance while keeping costs manageable, proving Aurora’s capabilities as a powerful and cost-effective database solution for large-scale applications.

Conclusion: Mastering AWS Aurora Pricing and Cost Optimization

AWS Aurora is a powerful and scalable database solution, but without careful management, costs can quickly escalate. By understanding the nuances of Aurora pricing and implementing best practices like right-sizing instances, using Aurora Serverless, leveraging reserved instances, and optimizing storage, you can significantly reduce your database expenses. Regularly monitor your costs with AWS tools or a FinOps platform such as Holori, stay informed about updates to Aurora features, and continuously refine your database strategy to maintain cost-efficiency.

With a mindful approach to AWS Aurora, you can achieve the perfect balance between performance, scalability, and affordability, ensuring your cloud infrastructure remains both effective and economical.

Visualize and optimize your AWS costs with Holori (14-day trial): https://app.holori.com