“BigQuery” has become synonymous with Google Cloud’s big data analytics, much like “S3” for AWS storage or “Cosmos DB” for Azure’s global databases. Known for handling petabytes of data with minimal infrastructure management, BigQuery provides fast, scalable analytics without requiring extensive data warehousing expertise. As the use of data, AI, and machine learning expands rapidly, the importance—and cost—of services like BigQuery has risen as well.

In this guide, we’ll break down the GCP BigQuery pricing structure and offer practical tips to help you optimize costs while maximizing BigQuery’s potential for your business.

What is BigQuery?

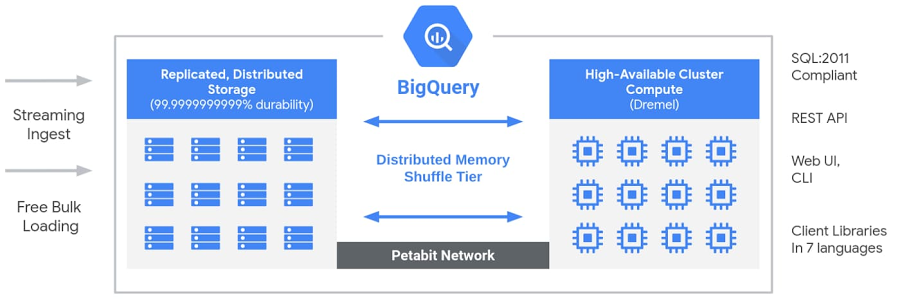

BigQuery is a fully managed, serverless data warehouse service by Google Cloud Platform (GCP) that enables efficient storage, processing, and querying of vast datasets. Designed to handle everything from basic data storage to complex analytics workloads, BigQuery removes the need for infrastructure management, automatically scaling resources to meet data demands as they grow.

BigQuery’s serverless architecture means that Google takes care of all infrastructure management, allowing users to focus on data analysis rather than configuring or maintaining servers. This automatic scaling is particularly advantageous for organizations with fluctuating or growing data needs.

In addition to batch processing, BigQuery supports real-time analytics and streaming, enabling instant insights from data as it’s ingested—a key feature for time-sensitive applications, such as tracking customer behavior or monitoring dynamic metrics. BigQuery also includes BigQuery ML, a built-in feature for developing machine learning models directly within the platform, allowing data teams to perform predictive analytics without needing advanced machine learning expertise.

BigQuery integrates seamlessly with other services within the GCP ecosystem, enhancing its capabilities for end-to-end data solutions. It works well with Looker Studio (formerly Google Data Studio) for reporting, Dataflow for real-time data processing, and Cloud Pub/Sub for data ingestion, among other GCP services. This interoperability makes it easy to build comprehensive data pipelines and applications, using GCP’s tools to manage, analyze, and visualize data in one ecosystem.

Overview of GCP BigQuery pricing

As you can imagine, for data- and ML-related services such as BigQuery, costs can grow quickly with usage. It’s therefore critical to understand each pricing component and its impact on your final bill.

BigQuery charges users based on two primary components:

- Compute: The cost to process queries, including SQL queries, user-defined functions, scripts, and certain data manipulation language (DML) statements.

- Storage: The cost of storing data loaded in BigQuery.

A third component is also worth mentioning: BigQuery additional services.

These include BigQuery Omni, BigQuery ML, BI Engine, and data ingestion/extraction.

These components have a major impact on your BigQuery bill at the end of the month, so it’s essential that you understand them.

GCP BigQuery compute pricing models

To run your queries, BigQuery offers two pricing models: on-demand pricing and capacity-based pricing.

The table below explains the main differences.

| Feature | BigQuery On-Demand Pricing | BigQuery Capacity-Based (Flat-Rate) Pricing |
|---|---|---|
| Pricing Model | Pay-per-query | Subscription-based (reserved slots) |
| Billing Metric | Per TiB of data processed in queries | Number of slots reserved (e.g., 100 slots, 500 slots) |
| Ideal For | Variable or unpredictable workloads | Predictable, high-volume workloads |
| Cost Predictability | Low (depends on query size and frequency) | High (fixed monthly or yearly cost) |
| Query Cost | $6.25 per TiB processed (us-east1) | N/A, as query costs are included in the flat-rate subscription |
| Capacity Flexibility | No pre-allocated capacity; scales with each query | Fixed capacity based on reserved slots |
| Concurrent Query Performance | May be limited if high-volume concurrent queries are run | Supports high concurrency based on slot availability |
| Discounts | Possible for high usage through committed usage plans | Discounts for yearly commitments (e.g., 20% for annual plans) |
| Best Use Case | Ad hoc analysis, exploratory queries | BI and data warehousing with consistent, heavy usage |
| Auto Scaling | Yes, auto-scales based on query demand | Optional; you allocate capacity yourself or let BigQuery scale it to the workload |

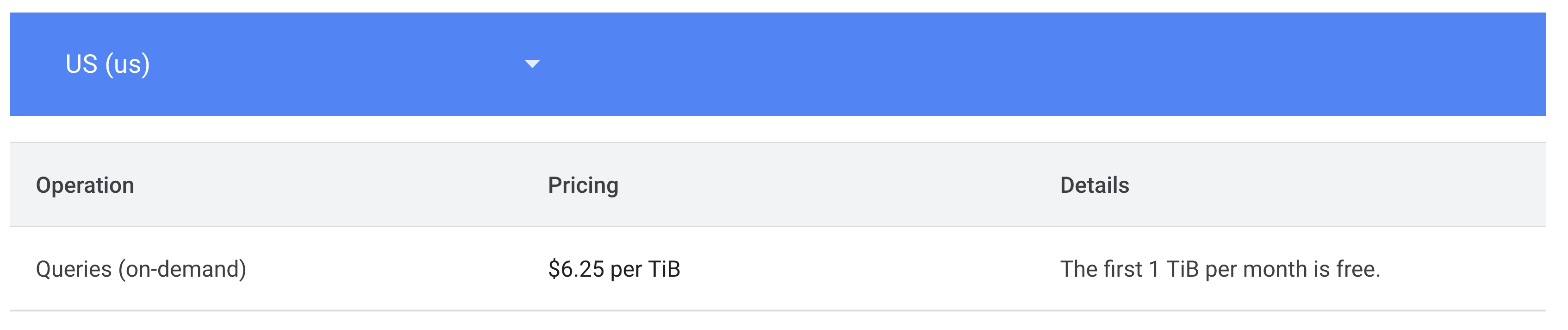

Focus on BigQuery On-Demand Compute pricing

With BigQuery’s on-demand pricing model, you pay for the data scanned by your queries, measured in TiB. This is the model applied by default.

In general, you have access to up to 2,000 concurrent slots. These are shared among all queries in a single project. BigQuery can go beyond this limit for short periods of time in order to accelerate smaller queries. Be aware that GCP can also temporarily reduce the slots available in certain regions during demand peaks.

There are several points to keep in mind when considering BigQuery’s on-demand pricing:

- Columnar data storage: BigQuery stores data in columns, so you are charged for the total data processed in the columns you select. The size of each column is calculated from the data types it contains.

- Queries against shared data are billed to whoever runs them. The data owner is not charged when others access their data.

- Queries that return an error are not charged.

Example: At $6.25 per TiB of data processed, a query that scans 100 GiB costs about $0.61 (100 / 1,024 × $6.25).
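To make the on-demand arithmetic and the effect of columnar storage more concrete, here is a minimal Python sketch. It assumes the $6.25 per TiB rate quoted in this article and binary units (1 TiB = 1,024 GiB); the table and its column sizes are hypothetical and invented purely for illustration.

```python
# Rough on-demand cost estimate. The rate comes from this article;
# the column sizes below are made-up illustration values.
PRICE_PER_TIB_USD = 6.25
GIB_PER_TIB = 1024


def on_demand_cost(scanned_gib: float, price_per_tib: float = PRICE_PER_TIB_USD) -> float:
    """Return the estimated cost in USD for scanning `scanned_gib` GiB."""
    return scanned_gib / GIB_PER_TIB * price_per_tib


# Hypothetical table: size of each column in GiB.
column_sizes_gib = {"user_id": 20, "event_time": 20, "country": 10, "payload": 450}

# Columnar storage: only the columns a query references are scanned.
select_all = sum(column_sizes_gib.values())  # SELECT *
select_two = column_sizes_gib["user_id"] + column_sizes_gib["country"]  # SELECT user_id, country

print(f"SELECT *               : ${on_demand_cost(select_all):.2f}")
print(f"SELECT user_id, country: ${on_demand_cost(select_two):.2f}")
```

With these made-up sizes, selecting two narrow columns scans 30 GiB instead of 500 GiB, which is why avoiding `SELECT *` is usually the first optimization to try.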

Focus on BigQuery Capacity Compute pricing

For users who prefer predictable costs over on-demand billing, the capacity-based analysis pricing model offers some peace of mind.

Processing capacity in BigQuery is measured in slots. Slots represent virtual CPUs that are used to query data. In general, access to more slots lets you run more concurrent queries, and complex queries run faster. The capacity-based pricing model lets you reserve a volume of slots.

There are multiple advantages when using capacity-based pricing:

- Cost: If your use of BigQuery is consistent, you can benefit from significant discounts. Keep on-demand for unscheduled tasks.

- Predictability: Eliminate the surprise of the end-of-month bill arriving. Capacity-based slots allow for more consistent monthly fees.

- Centralized purchasing: Manage purchases at a company level rather than at a project level.

- Flexibility: You can choose how much capacity to allocate to a workload or let BigQuery automatically scale capacity based on your workload requirements. You are billed in per-second increments with a minimum usage period of one minute.
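The per-second billing with a one-minute minimum can be sketched as follows. This is only an illustration: the price per slot-hour is a hypothetical placeholder (real rates depend on edition, region, and commitment), and rounding partial seconds up is an assumption of this sketch.

```python
import math

MIN_BILLED_SECONDS = 60  # one-minute minimum usage period
HYPOTHETICAL_PRICE_PER_SLOT_HOUR_USD = 0.04  # placeholder, not an official rate


def billed_seconds(duration_seconds: float) -> int:
    """Round up to whole seconds (assumption) and apply the one-minute minimum."""
    return max(MIN_BILLED_SECONDS, math.ceil(duration_seconds))


def capacity_cost(
    slots: int,
    duration_seconds: float,
    price_per_slot_hour: float = HYPOTHETICAL_PRICE_PER_SLOT_HOUR_USD,
) -> float:
    """Estimated cost in USD of holding `slots` slots for the given duration."""
    slot_hours = slots * billed_seconds(duration_seconds) / 3600
    return slot_hours * price_per_slot_hour


# A 10-second burst is billed as a full minute; a 90.2-second one as 91 seconds.
print(billed_seconds(10), billed_seconds(90.2))
print(f"${capacity_cost(100, 10):.4f}")
```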

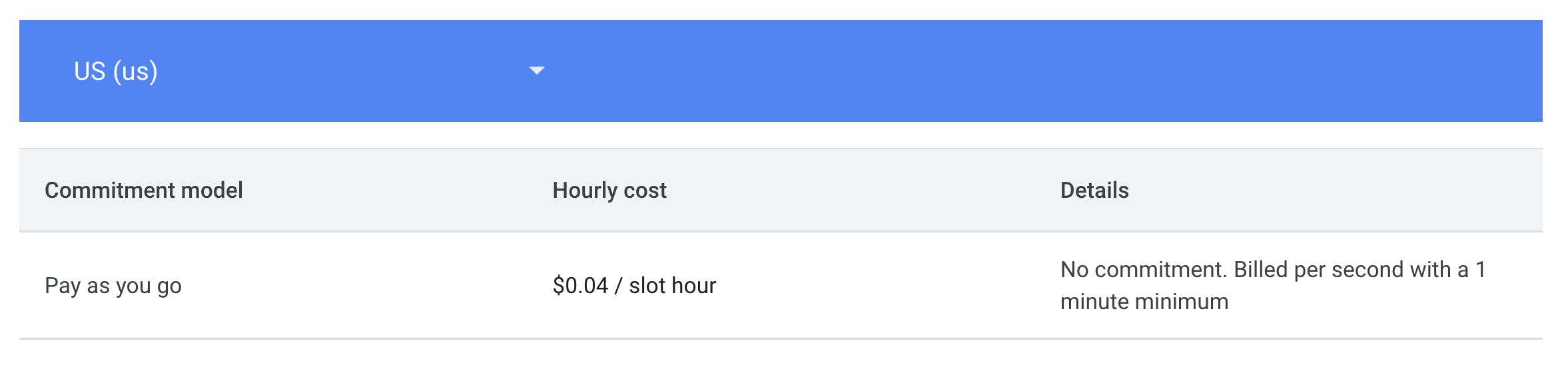

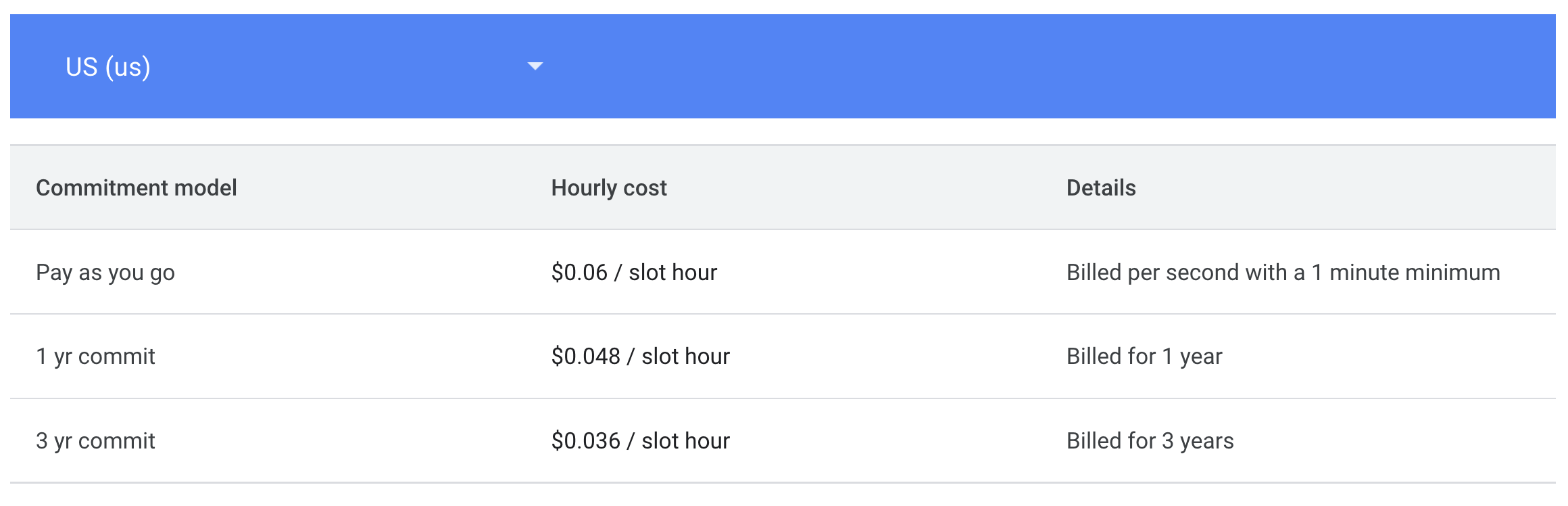

Here again, you can choose between two main options: Standard Edition, which is pay-as-you-go and well suited to dev or test workloads, and Enterprise Edition, which is best for production workloads and offers better performance. For the latter, you can also opt for a 1- or 3-year commitment, which helps keep costs low.

Standard Edition pricing

Enterprise Edition pricing

Example: If you pay a fixed $10,000/month for 500 slots, each slot costs you $20 per month.
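The same example can be extended into a quick break-even check between the two compute models. The sketch below reuses the figures from this article ($10,000 per month for 500 slots, $6.25 per TiB on demand); real slot prices vary by edition, region, and commitment, so treat the numbers as placeholders.

```python
MONTHLY_CAPACITY_COST_USD = 10_000  # example figure from this article
RESERVED_SLOTS = 500                # example figure from this article
ON_DEMAND_PRICE_PER_TIB_USD = 6.25  # on-demand rate quoted in this article


def cost_per_slot(monthly_cost: float, slots: int) -> float:
    """Monthly cost of a single reserved slot."""
    return monthly_cost / slots


def break_even_tib(monthly_cost: float, price_per_tib: float) -> float:
    """TiB scanned per month at which on-demand spend equals the fixed fee."""
    return monthly_cost / price_per_tib


def cheaper_model(
    monthly_tib_scanned: float,
    monthly_cost: float = MONTHLY_CAPACITY_COST_USD,
    price_per_tib: float = ON_DEMAND_PRICE_PER_TIB_USD,
) -> str:
    """Name the cheaper model for a given monthly scan volume (cost only)."""
    on_demand_spend = monthly_tib_scanned * price_per_tib
    return "capacity" if on_demand_spend > monthly_cost else "on-demand"


print(cost_per_slot(MONTHLY_CAPACITY_COST_USD, RESERVED_SLOTS))
print(break_even_tib(MONTHLY_CAPACITY_COST_USD, ON_DEMAND_PRICE_PER_TIB_USD))
print(cheaper_model(2_000), cheaper_model(500))
```

This comparison looks at cost only. It does not check whether 500 slots would actually deliver acceptable performance for the workload, which needs to be validated separately.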

GCP BigQuery Storage Pricing

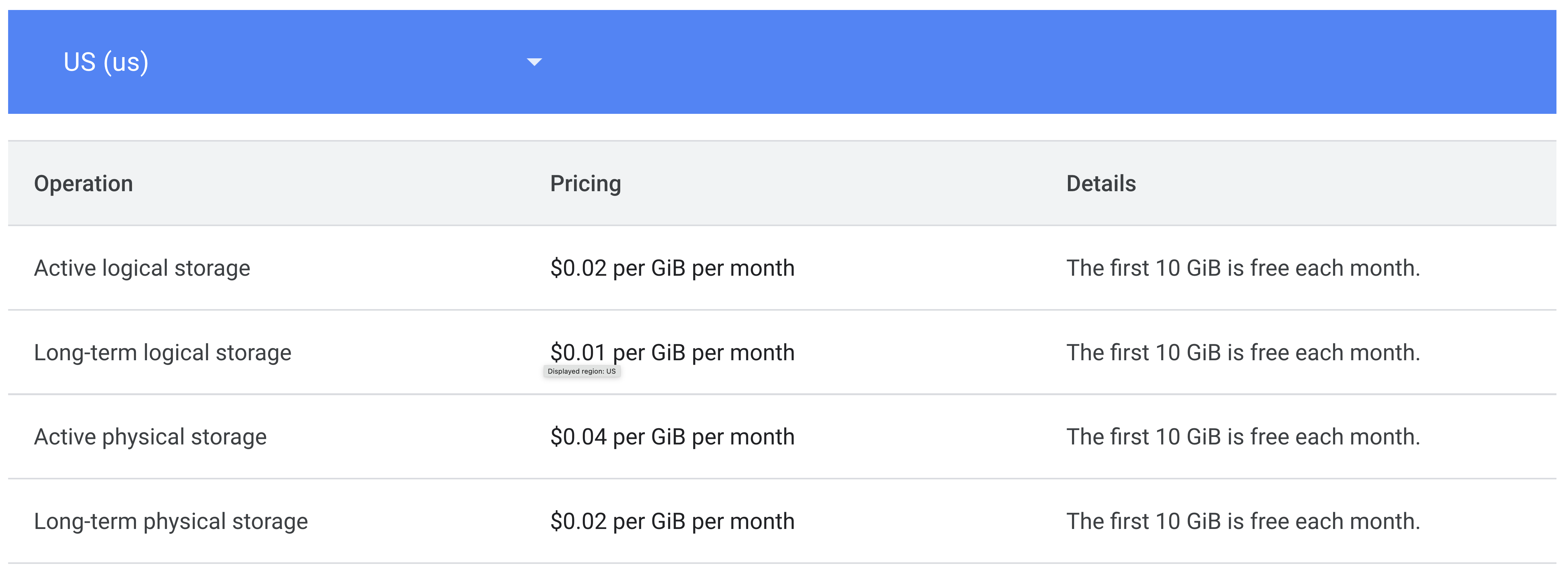

Storing data in BigQuery isn’t free. That’s of course not a surprise, but did you know that there are two storage types?

- Active Storage: For any table or table partition that has been modified in the past 90 days.

- Long-term Storage: For any table or table partition that hasn’t been modified in the past 90 days. Performance, availability, and durability are the same as for active storage, but the price drops by about 50%.

The good news is that the first 10 GiB of storage per month is free!
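As a rough illustration of the 90-day rule, the following sketch classifies a table as active or long-term storage and estimates its monthly storage cost. The active-storage price per GiB is a hypothetical placeholder (check the current pricing page for your region), the long-term rate is simply taken as half of it, and the free 10 GiB allowance is deliberately ignored to keep the example short.

```python
from datetime import datetime, timedelta, timezone

LONG_TERM_AFTER = timedelta(days=90)
HYPOTHETICAL_ACTIVE_PRICE_PER_GIB_USD = 0.02  # placeholder, not an official price


def storage_class(last_modified: datetime, now: datetime) -> str:
    """Return 'long-term' if the table has gone 90 days without modification."""
    return "long-term" if now - last_modified >= LONG_TERM_AFTER else "active"


def monthly_storage_cost(
    size_gib: float,
    last_modified: datetime,
    now: datetime,
    active_price_per_gib: float = HYPOTHETICAL_ACTIVE_PRICE_PER_GIB_USD,
) -> float:
    """Estimated monthly cost in USD; long-term storage is billed at half the active rate."""
    if storage_class(last_modified, now) == "active":
        rate = active_price_per_gib
    else:
        rate = active_price_per_gib / 2
    return size_gib * rate


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
recent = now - timedelta(days=10)
stale = now - timedelta(days=120)
print(storage_class(recent, now), monthly_storage_cost(100, recent, now))
print(storage_class(stale, now), monthly_storage_cost(100, stale, now))
```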

Additional factors influencing BigQuery pricing

Data Transfer price for BigQuery

We know you hate them, and so do we, but GCP data transfer prices can negatively impact your cloud costs.

Most BigQuery data transfer costs are governed by allowances that depend on the service used. There are too many to summarize here. For precise and up-to-date information about these quotas, please visit this page: https://cloud.google.com/bigquery/quotas#load_jobs

Once data is transferred to BigQuery, standard BigQuery storage and query pricing applies. Extracting data, uploading it to a Cloud Storage bucket, and loading it into BigQuery are free.

And finally, be aware that costs can be incurred outside of Google Cloud when using the BigQuery Data Transfer Service, such as AWS or Azure data transfer charges.

BigQuery Omni pricing

BigQuery Omni is Google Cloud’s multi-cloud analytics solution. It allows users to analyze data stored across various cloud environments such as GCP, AWS or Azure without requiring data movement or duplication. It extends BigQuery’s capabilities, enabling users to perform queries and manage data across platforms through a single interface.

As with BigQuery’s on-demand model, Omni queries are billed on demand per TiB of data scanned.

For example, queries on AWS in Northern Virginia cost $7.82 per TiB.

There are also costs that apply for data transfer to and from other providers as discussed in the previous section.

Data ingestion & extraction pricing

With BigQuery, data can be ingested either through batch loading or through streaming. Batch loading is free, while you pay for streaming inserts and for writes made with the Storage Write API (where the first 2 TiB per month are free).

When it comes to extraction, there are 3 possibilities:

- Batch export: Free up to 50 TiB per day, then prices vary depending on the origin and destination regions of the transfer.

- Export of query results: You are billed for processing the query statement using the on-demand or capacity-based model.

- Streaming reads: You are billed for the amount of data read.

Data Replication pricing

BigQuery offers 3 types of data replication.

- Cross region copy: one time

- Cross region replication: ongoing

- Cross region turbo replication: ongoing & high-performance

For each of them, you are charged for data transfer for the volume of data replicated.

To get a clear idea of the up-to-date pricing, check your scenario in GCP’s console or pricing grids. Replicating within the same region is often free.

BigQuery ML pricing

BigQuery ML pricing is structured around the stages of the machine learning workflow, which includes model training, evaluation, and prediction, along with associated data storage and querying costs in BigQuery.

When creating models, the cost is calculated based on the amount of data processed during training. Different types of models come with different pricing structures. For example, simpler models like linear regression or logistic regression are charged at a rate of $5 per terabyte of data processed, whereas more complex models, such as boosted trees or deep neural networks, typically have higher associated costs due to their increased computational demands. Google also provides a free tier for model training, allowing up to 10 gigabytes of data processed per month without cost.

After training, if you decide to evaluate your model, there is an additional charge based on data usage, as evaluation involves processing data to assess model accuracy and performance. These costs depend on the model’s complexity and the volume of data evaluated.

For making predictions, BigQuery ML also charges based on the amount of data processed, as each prediction runs through the model with new data input. Prediction costs are generally lower than training, as they require fewer computational resources.

Lastly, BigQuery storage and query costs apply for any data that resides within BigQuery or is queried as part of the ML process. These charges are separate from the ML-specific costs and follow standard BigQuery storage and querying fees.

Best practices to optimize BigQuery costs

Maximize the Free Operations in BigQuery

The first and best way to save money is to not spend any in the first place! Most cloud providers offer free tiers for their products, and GCP BigQuery is no exception. BigQuery’s free tier helps users start with data analytics and machine learning at no cost, offering monthly usage limits for storage, querying, and machine learning without incurring charges if they stay within the specified limits.

The free tier provides 10 GB of storage monthly for data in tables, views, and materialized views, plus 1 TB of free query processing monthly, covering standard SQL and ML training queries. This enables exploration, analytics, and basic ML model training at no cost. BigQuery ML extends this with 10 GB for model training and 1 GB for predictions per month, supporting models like linear and logistic regression. Additional free operations include data ingestion from Google Cloud Storage, metadata actions like listing datasets or viewing tables, and more, allowing users to perform many foundational tasks free of charge. By leveraging these allowances, you can make the most of BigQuery’s powerful capabilities while keeping costs low.

Holori’s 10 tips for optimizing BigQuery’s cost:

- Optimize Queries: Only select needed columns, filter data early with WHERE clauses, and use partitioned and clustered tables to reduce data scanned.

- Leverage Caching: Use cached query results for repeat queries within 24 hours to avoid additional charges.

- Set Cost Controls: Use budgets and alerts to track and limit spending. Check query cost estimates before running complex queries.

- Partition or Shard Large Tables: For datasets with time-based data, partition large tables by date (or split them into date-sharded tables) so that queries read only the relevant subset.

- Use Materialized Views: For frequently used queries, store precomputed results to save processing costs.

- Set Table Expiration Policies: Automatically delete old or unused tables to reduce storage costs.

- Consider Flat-Rate Pricing: For high, predictable usage, a flat-rate plan can be cheaper than per-query billing.

- Optimize Dashboards with BI Engine: Use BigQuery BI Engine for low-cost, in-memory caching on dashboards.

- Delete your data: Data is not automatically deleted from your Cloud Storage bucket after it is uploaded to BigQuery. Consider deleting it from your Cloud Storage bucket to avoid additional storage costs.

- Use Holori for Monitoring and Controlling BigQuery Costs
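To act on the "check query cost estimates" tip, a dry run reports how many bytes a query would scan without executing or billing it. The sketch below assumes the `google-cloud-bigquery` Python client is installed and that credentials are configured. The $6.25 per TiB rate is the on-demand figure used in this article, and the 1 TiB default cap and the function names are illustrative choices, not recommendations.

```python
PRICE_PER_TIB_USD = 6.25  # on-demand rate quoted in this article
BYTES_PER_TIB = 1024 ** 4


def bytes_to_usd(total_bytes: int, price_per_tib: float = PRICE_PER_TIB_USD) -> float:
    """Convert a number of scanned bytes into an estimated on-demand cost."""
    return total_bytes / BYTES_PER_TIB * price_per_tib


def estimate_query_cost(sql: str) -> float:
    """Dry-run the query and return its estimated on-demand cost in USD."""
    # Imported lazily so bytes_to_usd works without the client library.
    from google.cloud import bigquery

    client = bigquery.Client()  # relies on application default credentials
    config = bigquery.QueryJobConfig(dry_run=True, use_query_cache=False)
    job = client.query(sql, job_config=config)  # dry run: nothing is executed
    return bytes_to_usd(job.total_bytes_processed)


def run_with_cap(sql: str, max_bytes: int = BYTES_PER_TIB):
    """Run the query, failing instead of billing if it would exceed max_bytes."""
    from google.cloud import bigquery

    client = bigquery.Client()
    config = bigquery.QueryJobConfig(maximum_bytes_billed=max_bytes)
    return client.query(sql, job_config=config).result()


# Pure arithmetic check that needs no GCP access: 100 GiB at the on-demand rate.
print(f"${bytes_to_usd(100 * 1024 ** 3):.2f}")
```

Calling `estimate_query_cost` before a large ad hoc query, and `run_with_cap` for anything user-generated, gives a simple guardrail alongside budgets and alerts.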

How Holori can help you optimize BigQuery costs

Holori is a powerful new generation FinOps software. Create a free account, connect your cloud providers and start visualizing your costs within minutes.

Centralize your cloud costs

Visualize your cloud costs from multiple providers and accounts in interactive dashboards. Use intelligent filters to gain new insights into your cloud costs. For example, discover how much you spent on BigQuery in the US last quarter and compare it to other regions.

Cost saving recommendations

It can be hard to understand which cost saving measures to implement. With Holori, you get actionable cost optimization recommendations. We help you identify unused resources, over- or undersized elements, and inadequate purchase plans. We also tell you exactly how much you would save by implementing our recommendations and even offer multiple options.

Visualize your infra from a new angle with automated diagrams

What’s the best way to simplify complex ideas when explaining them to someone? You put them down on paper as a simple diagram. That’s exactly how Holori helps you understand your cloud infra. We automatically generate cloud infra diagrams, so you can visualize your regions, VPCs, subnets, and resources graphically.

Sounds great, right? Give it a try for free here.

Conclusion

BigQuery offers a flexible, powerful, and relatively straightforward pricing model that can fit various workloads, from small-scale exploratory analysis to massive, enterprise-level data processing. The key to controlling BigQuery costs lies in understanding storage and analysis charges, optimizing query efficiency, and choosing the right pricing model.

For organizations looking to leverage BigQuery effectively, Google’s data warehouse can be both economical and highly functional, enabling extensive data-driven insights without high, unpredictable costs. Using a third-party cost visualization tool such as Holori helps you gain new insights into your cloud costs. By monitoring resource usage and implementing recommendations, it becomes possible to save thousands of dollars.

Visualize and optimize your GCP cloud spend now for free: https://app.holori.com/