Amazon SageMaker has become a cornerstone for businesses aiming to unlock the potential of artificial intelligence (AI) and machine learning (ML) without the burden of managing complex infrastructure. It offers a robust suite of tools designed to streamline the end-to-end ML lifecycle—from data preparation and model building to training, tuning, and deploying at scale. SageMaker’s integrated environment empowers data scientists and developers to focus on creating powerful AI models, leveraging advanced features like automated model tuning, built-in algorithms, and deep learning frameworks. However, as with any cloud service, understanding SageMaker’s pricing structure and how to optimize costs is essential for maximizing ROI. In this guide, we’ll explore SageMaker’s detailed pricing structure, identify the major cost drivers, and share effective strategies for cost optimization. A deep understanding of each SageMaker tool will help you get the most out of your AI and ML investments on AWS.

What is AWS SageMaker?

Amazon SageMaker is a comprehensive, fully managed machine learning (ML) service from AWS, designed to streamline the entire ML workflow for developers and data scientists. It enables users to build, train, and deploy ML models at scale, offering a broad suite of specialized tools to address each stage of the ML lifecycle—from data preparation to deployment. With SageMaker, you can transform raw data into actionable insights without the hassle of managing infrastructure, leveraging popular frameworks like TensorFlow, PyTorch, and Apache MXNet, as well as custom algorithms.

Each SageMaker product is tailored to different aspects of ML workflows and comes with its own pricing structure:

- SageMaker Studio: An integrated development environment (IDE) that unifies all your ML activities in one place. Charges depend on the instance type used and the duration it’s active. Additional costs may apply if using built-in tools like Data Wrangler or Autopilot.

- SageMaker Studio Notebooks: Pre-built, collaborative Jupyter notebooks that can be customized without server management. Costs are based on the compute instance type selected and the number of hours it’s running, plus any storage volumes attached to the notebooks.

- SageMaker Data Wrangler: Simplifies data import, transformation, and feature engineering through a visual interface. Billed based on the compute instance type, job duration, and storage for importing/exporting datasets.

- SageMaker Autopilot: Automates model training and tuning, providing transparency and customizability. Charges include the compute resources used during training, tuning, and data storage for generated datasets.

- SageMaker Canvas: A no-code solution for business analysts to create ML models with a drag-and-drop interface. Costs are based on application usage hours and underlying data processing.

- SageMaker Ground Truth: A data labeling tool that supports both manual and automated labeling. Pricing is based on the number of labeling tasks, with added costs for human labelers or automated labeling services.

- SageMaker JumpStart: Offers pre-built solutions and models for common ML use cases, enabling rapid deployment. Costs vary depending on the compute resources required and the complexity of the model.

- SageMaker Training: Dedicated to training ML models, supporting distributed training across high-performance compute instances. Pricing depends on the instance type, training duration, and additional features like hyperparameter tuning.

- SageMaker Model Registry: A central repository for tracking and managing trained models, with costs based on the number and size of models stored.

- SageMaker Pipelines: Facilitates CI/CD for ML with automated workflows for model building, testing, and deployment. Charges are linked to compute resources, storage, and data transfer needs during pipeline operations.

- SageMaker Clarify: Tools for bias detection and model explainability. Costs depend on the compute resources required for the analyses.

- SageMaker Feature Store: A managed repository for storing and retrieving features for ML models. Pricing includes storage costs and read/write operations.

- SageMaker Neo: Optimizes models for faster performance on edge devices. Charges depend on optimization tasks and target deployment hardware.

- SageMaker Inference Options: Provides flexible deployment solutions—such as Real-Time Inference, Batch Transform, Multi-Model Endpoints, and Serverless Inference. Costs are generally based on the compute instance type, prediction duration, and data transfer.

Understanding AWS SageMaker pricing in the ML pipeline and key cost drivers

AWS SageMaker offers a variety of services that can be broadly categorized into four main areas:

- Data Preparation & Feature Engineering

- Model Building (Notebook Instances)

- Model Training

- Model Deployment & Inference

1. Data Preparation

Data preparation often involves leveraging SageMaker Data Wrangler or processing data through SageMaker Processing Jobs. Costs here are generally tied to the instance type, size, and duration of the processing jobs. Additionally, costs for storing data in Amazon S3 or using managed databases like Amazon RDS may come into play.

2. Model Building (Notebook Instances)

SageMaker provides a variety of managed notebook instances that are preconfigured for ML. You pay for the type of instance you select (e.g., ml.t3.medium, ml.c5.xlarge) and the duration for which it’s running. Some costs to consider:

- Compute costs: Billed on an hourly basis based on the instance type.

- Storage costs: Charged for persistent storage volumes attached to the instance.

3. Model Training

Training a model is one of the most resource-intensive and costly aspects. SageMaker charges based on:

- Training instance type: More powerful instances like ml.p3.16xlarge with GPUs will cost significantly more than CPU-based instances.

- Duration: Billed per second, so longer training times increase costs.

- Hyperparameter tuning: If you use SageMaker’s Automatic Model Tuning, costs can escalate with the number of training jobs launched during the tuning process.

4. Model Deployment & Inference

For deploying and serving predictions, you have several options, including:

- Real-time inference endpoints: Instances dedicated to real-time predictions are billed by the hour.

- Batch Transform Jobs: Ideal for large datasets; charges are based on the instance type and duration.

- Serverless Inference: Newer option where you’re billed based on the number of requests and the amount of compute used per request.

- Multi-model endpoints: Allows hosting multiple models on the same endpoint, which can save costs.

Key Drivers that impact AWS SageMaker pricing

While the breakdown of services provides a general overview, there are specific elements within SageMaker that can quickly drive up costs if not monitored closely:

- Instance Types: High-performance instances, especially GPU-based ones, are expensive.

- Data Storage: Costs for storing training data, model artifacts, and notebook data in Amazon S3 can add up.

- Model Training Time: The longer the training job runs, the higher the costs.

- Endpoints: Real-time inference can be costly if the endpoint is always running, even during low traffic periods.

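- Network Costs: Moving data between AWS services in the same region (e.g., S3 to SageMaker) is generally free, but transferring data across regions or out to the internet incurs data transfer fees.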

AWS SageMaker Pricing Models

AWS SageMaker offers a variety of pricing models to accommodate different usage needs and budget constraints:

SageMaker Free Tier

SageMaker is available to try for free as part of the AWS Free Tier, offering new users two months of free usage to explore its features without initial costs. Here’s what the free tier includes:

- Studio Notebooks and Notebook Instances: 250 hours of ml.t3.medium instance on Studio notebooks OR 250 hours of ml.t2.medium or ml.t3.medium instance on notebook instances per month for the first two months.

- RStudio on SageMaker: 250 hours of ml.t3.medium instance on RSession app AND a free ml.t3.medium instance for RStudioServerPro app per month for the first two months.

- Data Wrangler: 25 hours of ml.m5.4xlarge instance per month for the first two months.

- Feature Store: 10 million write units, 10 million read units, and 25 GB storage (standard online store) per month for the first two months.

- Training: 50 hours of m4.xlarge or m5.xlarge instances per month for the first two months.

- SageMaker with TensorBoard: 300 hours of ml.r5.large instance per month for the first two months.

- Real-Time Inference: 125 hours of m4.xlarge or m5.xlarge instances per month for the first two months.

- Serverless Inference: 150,000 seconds of on-demand inference duration per month for the first two months.

- Canvas: 160 hours of session time per month for the first two months.

- HyperPod: 50 hours of m5.xlarge instance per month for the first two months.

This comprehensive free tier lets users experiment with various SageMaker capabilities, making it ideal for learning and testing without financial commitment. For more updated information, you can check Amazon SageMaker Free Tier Pricing.

AWS SageMaker On-Demand Pricing

The on-demand pricing model charges users based on the resources they consume, with no upfront commitments. This flexibility allows organizations to scale their machine learning workloads according to their needs. On-Demand billing applies to multiple features including Studio Classic, JupyterLab, Code Editor, RStudio, and more. This model is well-suited for projects with unpredictable workloads that require flexibility.

AWS SageMaker Savings Plan

The SageMaker Savings Plan offers up to 64% in cost savings for organizations that commit to a consistent usage level over a one- or three-year term. Key features include:

- Flexible Commitment: Choose between one- or three-year terms, with longer commitments offering greater savings.

- Automatic Discounts: Discounts apply automatically to eligible SageMaker services, including Studio, Data Wrangler, Training, and Inference, across all instance types, sizes, and regions.

- Payment Options: Opt for no upfront, partial, or full upfront payments to maximize discounts.

- Broad Coverage: Applies to compute instances and data processing, offering comprehensive cost savings.

- Predictable Costs: Simplifies budgeting by providing consistent and reduced rates, helping to manage expenses effectively.

Savings Plans are ideal for organizations with steady ML workloads, offering both flexibility and cost efficiency.
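The way a commitment plays out can be sketched with simple arithmetic. The model below is a simplification and an assumption on our part, not AWS's billing logic: it treats usage as a steady on-demand-equivalent spend per hour, applies one flat discount rate, bills usage above the covered amount at on-demand rates, and charges the full commitment even when usage falls short. The 50% discount and the dollar amounts are illustrative values, not AWS rates.

```python
HOURS_PER_MONTH = 730  # common approximation for one month


def monthly_cost_with_plan(on_demand_spend_per_hour: float,
                           commitment_per_hour: float,
                           discount: float,
                           hours: int = HOURS_PER_MONTH) -> float:
    """Estimate monthly cost under a simplified Savings Plan model."""
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    # On-demand-equivalent usage that the discounted commitment covers.
    covered = commitment_per_hour / (1 - discount)
    # Usage above the covered amount is billed at on-demand rates.
    overflow = max(0.0, on_demand_spend_per_hour - covered)
    # The commitment is charged in full even if usage is lower.
    return (commitment_per_hour + overflow) * hours


# Hypothetical workloads, all with a $0.50/hour commitment at a 50% discount.
steady = monthly_cost_with_plan(1.00, 0.50, 0.50)      # usage matches the plan
under_used = monthly_cost_with_plan(0.40, 0.50, 0.50)  # usage below the plan
on_demand_only = 0.40 * HOURS_PER_MONTH                # same low usage, no plan

print(f"steady: ${steady:.2f}, under-used: ${under_used:.2f}, "
      f"on-demand only: ${on_demand_only:.2f}")
```

In this sketch the under-used workload pays more with the plan than without it, which is why a commitment only makes sense when the baseline usage is genuinely steady.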

To explore the options for all SageMaker products, see the AWS Savings Plans calculator.

Cost Optimization Strategies for AWS SageMaker

Cost optimization in AWS SageMaker requires a strategic approach, balancing performance with price. Here are some effective strategies:

1. Choose the Right Instance Types

Choosing the right instance type is critical for cost control. Here’s how you can optimize:

- Start Small: Begin with smaller instance types (ml.t3.medium) for development and testing. Scale up to larger types only when necessary.

- Leverage Spot Instances: Use spot instances for training jobs. These offer up to 90% savings compared to On-Demand instances but come with the risk of interruptions.

- Use Savings Plans: If you have predictable usage patterns, consider committing to a SageMaker Savings Plan to get discounts on instance costs.

See the Amazon SageMaker pricing page for current instance prices, which vary by region and product. The table below is only an extract.

| Standard Instances | vCPU | Memory | Price per Hour |
|---|---|---|---|
| ml.t3.medium | 2 | 4 GiB | $0.05 |
| ml.t3.large | 2 | 8 GiB | $0.10 |
| ml.t3.xlarge | 4 | 16 GiB | $0.20 |
| ml.t3.2xlarge | 8 | 32 GiB | $0.399 |
| ml.m5.large | 2 | 8 GiB | $0.115 |
| ml.m5.xlarge | 4 | 16 GiB | $0.23 |
| ml.m5.2xlarge | 8 | 32 GiB | $0.461 |
| ml.m5.4xlarge | 16 | 64 GiB | $0.922 |
| ml.m5.8xlarge | 32 | 128 GiB | $1.843 |
| ml.m5.12xlarge | 48 | 192 GiB | $2.765 |
| ml.m5.16xlarge | 64 | 256 GiB | $3.686 |
| ml.m5.24xlarge | 96 | 384 GiB | $5.53 |
| ml.m5d.large | 2 | 8 GiB | $0.136 |
| ml.m5d.xlarge | 4 | 16 GiB | $0.271 |
| ml.m5d.2xlarge | 8 | 32 GiB | $0.542 |
| ml.m5d.4xlarge | 16 | 64 GiB | $1.085 |
| ml.m5d.8xlarge | 32 | 128 GiB | $2.17 |
| ml.m5d.12xlarge | 48 | 192 GiB | $3.254 |
| ml.m5d.16xlarge | 64 | 256 GiB | $4.339 |
| ml.m5d.24xlarge | 96 | 384 GiB | $6.509 |
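As a rough illustration of how the hourly prices above translate into a bill, here is a minimal Python sketch. The three prices are copied from the table extract, so they are only valid where that extract is. The usage patterns (an instance left running all month versus one used eight hours a day on 22 working days) are hypothetical.

```python
# Hourly prices in USD, copied from the table extract above.
# Actual prices vary by region and product.
PRICE_PER_HOUR = {
    "ml.t3.medium": 0.05,
    "ml.m5.xlarge": 0.23,
    "ml.m5.4xlarge": 0.922,
}


def estimate_cost(instance_type: str, hours: float, instance_count: int = 1) -> float:
    """Return the estimated compute cost in USD, rounded to cents."""
    return round(PRICE_PER_HOUR[instance_type] * hours * instance_count, 2)


# Hypothetical usage patterns for a single ml.m5.xlarge notebook instance.
always_on = estimate_cost("ml.m5.xlarge", hours=24 * 30)       # never stopped
business_hours = estimate_cost("ml.m5.xlarge", hours=8 * 22)   # stopped when idle

print(f"always on: ${always_on:.2f}, business hours only: ${business_hours:.2f}")
```

The gap between the two figures comes entirely from idle hours, which is why stopping unused instances is usually the first optimization to try.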

2. Optimize Storage Costs

Storage costs can quickly add up if not managed properly. Consider the following:

- S3 Storage Classes: Store data in cost-effective S3 storage classes, like S3 Standard-Infrequent Access or S3 Glacier, for data that you don’t need frequently.

- Clean Up Unused Data: Regularly review and delete old SageMaker artifacts, training data, and endpoints that are no longer in use.

- Use Lifecycle Policies: Set up S3 lifecycle policies to automatically move or delete data based on predefined rules.
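A lifecycle policy of the kind described above could look like the following sketch. It only builds the configuration dictionary in the shape that boto3's `put_bucket_lifecycle_configuration` accepts. The bucket name, the prefix, and the day thresholds are hypothetical values chosen for illustration, and the call to AWS is left as a comment so the example runs without credentials.

```python
def build_lifecycle_configuration(prefix: str,
                                  ia_after_days: int = 30,
                                  glacier_after_days: int = 90,
                                  expire_after_days: int = 365) -> dict:
    """Build an S3 lifecycle configuration that tiers, then deletes, objects."""
    if not ia_after_days < glacier_after_days < expire_after_days:
        raise ValueError("thresholds must increase: IA < Glacier < expiration")
    return {
        "Rules": [
            {
                "ID": "tier-and-expire-ml-artifacts",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                # Move objects to cheaper storage classes as they age.
                "Transitions": [
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                ],
                # Delete objects once they are no longer needed.
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


# Hypothetical prefix holding old training outputs.
config = build_lifecycle_configuration("training-output/")

# Applying it would look like this (requires boto3 and AWS credentials):
# import boto3
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="example-ml-bucket", LifecycleConfiguration=config)
```

Scoping the rule to a prefix keeps it away from data that must stay in S3 Standard, such as datasets read by active training jobs.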

3. Reduce Model Training Time

Training is one of the biggest cost drivers in SageMaker. Here’s how to keep it under control:

- Use Managed Spot Training: SageMaker allows you to use spot instances for training jobs. This can drastically reduce costs if you can tolerate occasional interruptions.

- Early Stopping: Enable early stopping to halt training if progress stagnates, saving both time and money.

- Optimize Algorithms: Use SageMaker’s pre-built, optimized algorithms instead of custom scripts. These are tuned to run efficiently on AWS infrastructure.

- Batch Training: If possible, train your model in batches to minimize the time required for a single training run.
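To make Managed Spot Training concrete, the sketch below builds the spot-related fields of a `CreateTrainingJob` request and computes the savings from the `TrainingTimeInSeconds` and `BillableTimeInSeconds` values that `DescribeTrainingJob` reports. It is a fragment, not a complete request: the algorithm, role, and data settings are omitted, and the S3 URI and time limits are hypothetical.

```python
def spot_training_settings(checkpoint_s3_uri: str,
                           max_runtime_seconds: int = 3600,
                           max_wait_seconds: int = 7200) -> dict:
    """Return the spot-related fields of a CreateTrainingJob request."""
    if max_wait_seconds < max_runtime_seconds:
        raise ValueError("max wait time must be at least the max runtime")
    return {
        "EnableManagedSpotTraining": True,
        "StoppingCondition": {
            "MaxRuntimeInSeconds": max_runtime_seconds,
            # Includes time spent waiting for spot capacity.
            "MaxWaitTimeInSeconds": max_wait_seconds,
        },
        # Checkpoints let an interrupted job resume instead of restarting.
        "CheckpointConfig": {"S3Uri": checkpoint_s3_uri},
    }


def spot_savings_percent(training_seconds: int, billable_seconds: int) -> float:
    """Savings relative to on-demand, from DescribeTrainingJob timings."""
    if training_seconds <= 0:
        raise ValueError("training_seconds must be positive")
    return 100 * (training_seconds - billable_seconds) / training_seconds


settings = spot_training_settings("s3://example-ml-bucket/checkpoints/")
# Hypothetical job: trained for 1 hour, billed for 18 minutes.
savings = spot_savings_percent(training_seconds=3600, billable_seconds=1080)
print(f"spot savings: {savings:.0f}%")
```

The checkpoint location matters as much as the spot flag: without it, an interruption late in a long job throws away the work already paid for.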

4. Optimize Inference Costs

Deploying models for inference can become costly if not managed well. To optimize:

- Auto Scaling: Use SageMaker’s auto-scaling capabilities to scale your endpoints up and down based on traffic. This avoids paying for unused capacity during off-peak times.

- Batch Transform Instead of Real-Time Endpoints: For non-time-sensitive predictions, use Batch Transform Jobs, which are often cheaper than always-on endpoints.

- Serverless Inference: If traffic is unpredictable, consider using SageMaker’s serverless inference, where you pay only for the compute used to process requests rather than for an always-on instance.

- Multi-Model Endpoints: Host multiple models on a single endpoint, reducing the number of active instances required.
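Endpoint auto scaling is configured through Application Auto Scaling. The sketch below builds the two request payloads involved, one for `register_scalable_target` and one for `put_scaling_policy`, using a target-tracking policy on invocations per instance. The endpoint name, variant name, capacity limits, target value, and cooldowns are hypothetical and would need tuning for a real workload. The AWS calls are shown as comments so the example runs without credentials.

```python
def endpoint_autoscaling_requests(endpoint_name: str,
                                  variant_name: str = "AllTraffic",
                                  min_instances: int = 1,
                                  max_instances: int = 4,
                                  invocations_per_instance: float = 100.0):
    """Build Application Auto Scaling requests for a SageMaker endpoint variant."""
    common = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
    }
    target = {**common, "MinCapacity": min_instances, "MaxCapacity": max_instances}
    policy = {
        **common,
        "PolicyName": f"{endpoint_name}-invocations-target",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Add or remove instances to hold this many invocations each.
            "TargetValue": invocations_per_instance,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
            "ScaleInCooldown": 300,   # seconds to wait before removing capacity
            "ScaleOutCooldown": 60,   # seconds to wait before adding more
        },
    }
    return target, policy


target, policy = endpoint_autoscaling_requests("example-endpoint")

# Applying them would look like this (requires boto3 and AWS credentials):
# import boto3
# client = boto3.client("application-autoscaling")
# client.register_scalable_target(**target)
# client.put_scaling_policy(**policy)
```

A longer scale-in cooldown than scale-out cooldown is a common choice: it reacts quickly to traffic spikes while avoiding repeated shrink-and-grow cycles.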

5. Consolidate Training Jobs with Hyperparameter Tuning

While hyperparameter tuning can increase costs due to multiple training runs, it can be optimized:

- Bayesian Optimization: Use SageMaker’s built-in Bayesian Optimization for efficient hyperparameter tuning, which reduces the number of training runs needed to find optimal parameters.

- Warm Start: Leverage warm-start tuning to reuse results from previous runs, saving time and resources.
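The tuning options above map onto a few fields of a `CreateHyperParameterTuningJob` request. The sketch below builds only those fields (strategy, resource limits, early stopping, and an optional warm start) and adds a worst-case cost bound. It is a fragment under stated assumptions: parameter ranges, the objective metric, and the training job definition are omitted, and the job name, limits, and price are hypothetical.

```python
from typing import Optional, Tuple


def tuning_job_settings(max_jobs: int,
                        max_parallel: int,
                        parent_job_name: Optional[str] = None
                        ) -> Tuple[dict, Optional[dict]]:
    """Return (HyperParameterTuningJobConfig fragment, WarmStartConfig or None)."""
    config = {
        "Strategy": "Bayesian",
        # Hard cap on how many training jobs the tuner may launch.
        "ResourceLimits": {
            "MaxNumberOfTrainingJobs": max_jobs,
            "MaxParallelTrainingJobs": max_parallel,
        },
        # Let SageMaker stop training jobs that are unlikely to improve.
        "TrainingJobEarlyStoppingType": "Auto",
    }
    warm_start = None
    if parent_job_name:
        warm_start = {
            "ParentHyperParameterTuningJobs": [
                {"HyperParameterTuningJobName": parent_job_name}
            ],
            "WarmStartType": "IdenticalDataAndAlgorithm",
        }
    return config, warm_start


def worst_case_tuning_cost(max_jobs: int, max_hours_per_job: float,
                           price_per_hour: float) -> float:
    """Upper bound on compute cost if every job runs to its time limit."""
    return max_jobs * max_hours_per_job * price_per_hour


config, warm_start = tuning_job_settings(20, 2, parent_job_name="example-previous-tuning-job")
# Hypothetical budget check: 20 jobs, 1 hour each, at $0.23 per hour.
budget_ceiling = worst_case_tuning_cost(20, 1.0, 0.23)
print(f"worst-case tuning cost: ${budget_ceiling:.2f}")
```

Setting `MaxNumberOfTrainingJobs` together with a per-job runtime limit gives a ceiling on tuning spend before the job starts, which is easier to budget for than an open-ended search.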

6. Leverage Savings Plans for Consistent Workloads

If you have a consistent ML workload, consider committing to a Savings Plan:

- SageMaker Savings Plans: These provide flexibility across instance types and regions, offering savings in exchange for a commitment to a specific amount of compute usage.

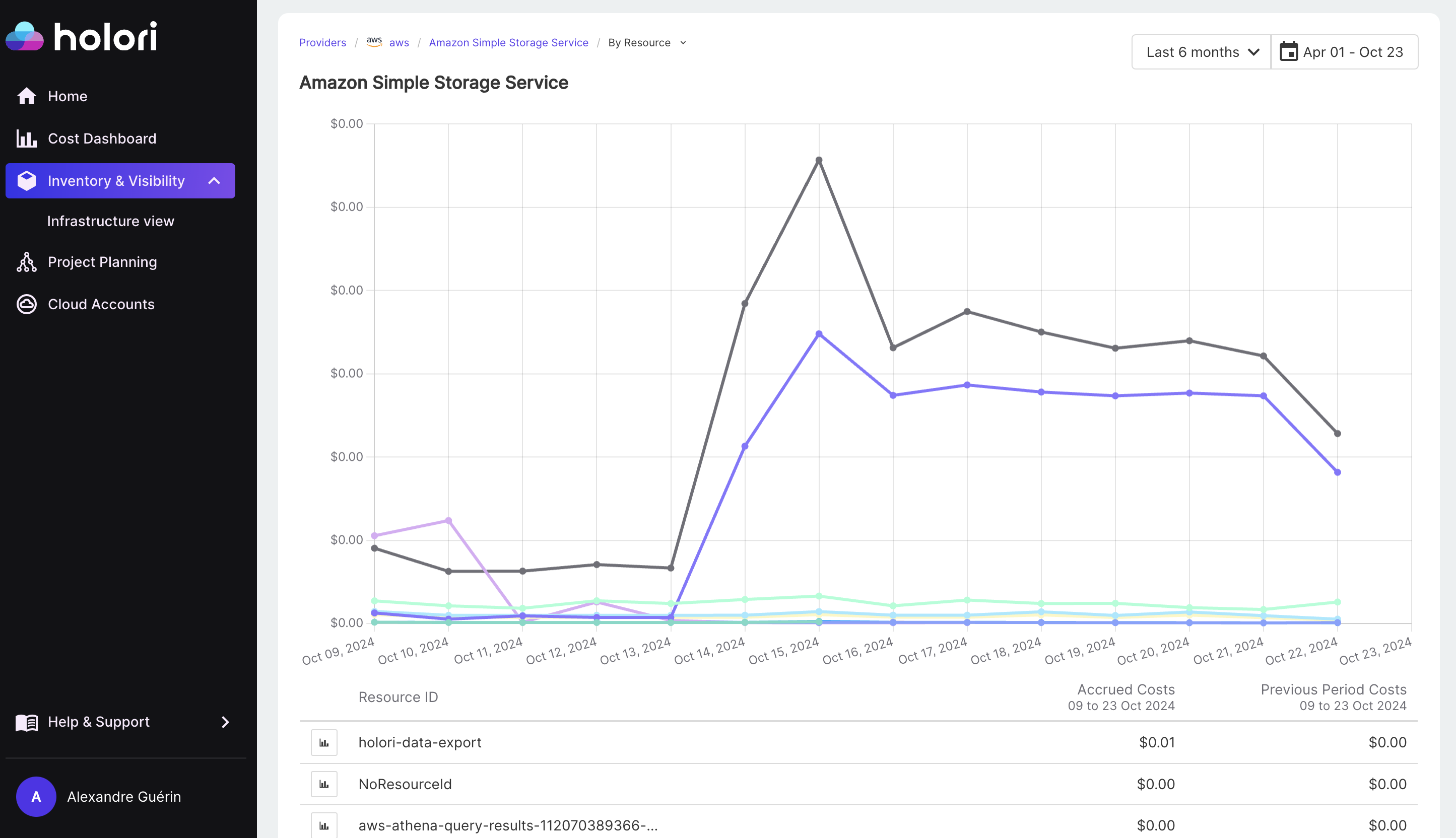

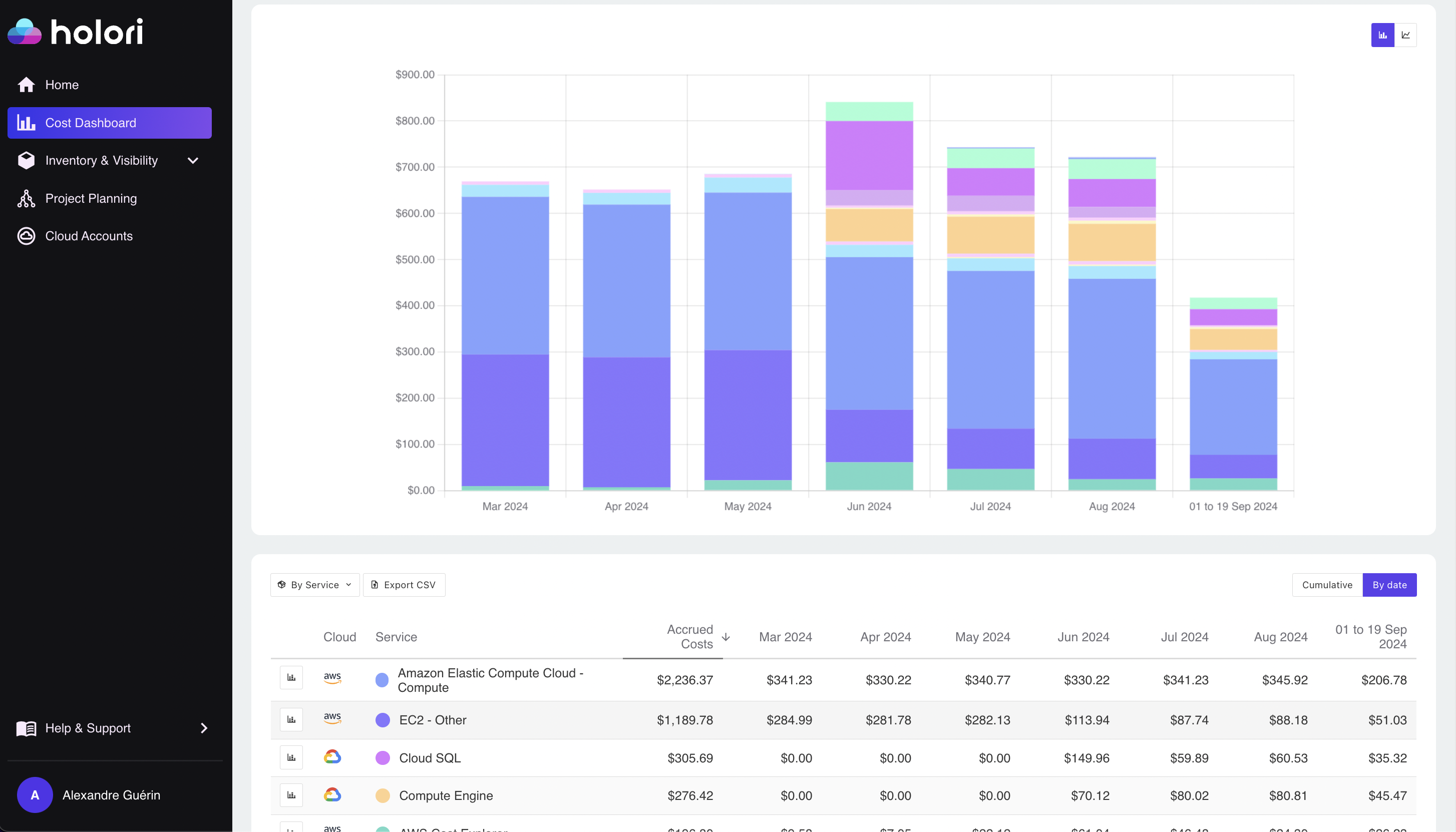

How Holori Can Help Visualize and Optimize Your SageMaker Costs

Holori is a modern cloud cost management platform that provides an intuitive interface for tracking and managing AWS costs, including those related to SageMaker. With Holori, you gain access to visually engaging dashboards and comprehensive cost analytics tailored for cloud infrastructure. Holori excels in creating detailed infrastructure diagrams, allowing you to see exactly how your SageMaker resources are interconnected and where costs are accumulating. By pinpointing high-cost areas and analyzing resource utilization, Holori helps you identify potential inefficiencies and optimize your SageMaker environment.

Holori delivers targeted recommendations for optimizing SageMaker costs, including precise instance rightsizing, suggestions for suitable Savings Plans, and alerts to delete unused SageMaker resources. By providing actionable insights, Holori ensures that your machine learning infrastructure is both cost-effective and well-optimized, helping you eliminate unnecessary expenses and fully utilize available resources. Furthermore, its easy-to-use cost forecasting and budgeting features empower you to plan for future expenses, making it an ideal tool for controlling and reducing SageMaker costs.

Conclusion

AWS SageMaker is a powerful platform for building, training, and deploying machine learning models, allowing organizations to harness the capabilities of AI at scale. However, costs can quickly spiral if not managed carefully. AWS SageMaker pricing is complex, but by choosing the right instance types, leveraging cost-saving tools, and optimizing storage and inference methods, you can maximize the potential of AI without overspending.

Whether you’re a data scientist, ML engineer, or IT manager, keeping a close eye on AWS SageMaker’s pricing elements and implementing these cost optimization strategies will help you fully realize the benefits of AI while minimizing cloud expenses. Start small, scale wisely, and stay updated with AWS’s latest offerings and pricing updates to ensure your AI-driven workloads remain both efficient and cost-effective.

Try the Holori cloud cost management platform now to optimize your SageMaker costs (14-day free trial): https://app.holori.com/.